Overview

This post walks through how to set up a GPU server on Google Cloud Platform (GCP).

The GPU used here is the NVIDIA A100.

Example source code is available in the GitHub repository below.

https://github.com/somaz94/terraform-infra-gcp/tree/main/project/somaz-ai-project


How to Set Up a GCP GPU Server

For details on creating a Compute Engine instance, see the post linked below.

2023.10.08 - [GCP] - IAM Settings When Creating a GKE Cluster with a Shared VPC


The GPU server will run the following services.

https://github.com/AUTOMATIC1111/stable-diffusion-webui

https://github.com/bmaltais/kohya_ss

https://github.com/comfyanonymous/ComfyUI

1. Check the GPU to Use

The available GPU platforms are listed at the link below.

https://cloud.google.com/compute/docs/gpus?hl=ko


2. Check the Regions/Zones Where the GPU Is Available

GPU availability differs from zone to zone within a region. This post uses the NVIDIA A100 as the example.

The following zones in the `asia-northeast3` (Seoul) region offer the NVIDIA A100 40GB.

gcloud compute accelerator-types list --filter="name:nvidia-tesla-a100 AND zone:asia-northeast3"

NAME ZONE DESCRIPTION

nvidia-tesla-a100 asia-northeast3-a NVIDIA A100 40GB

nvidia-tesla-a100 asia-northeast3-b NVIDIA A100 40GB

The query below lists every zone, across all regions, where the A100 is available.

gcloud compute accelerator-types list |grep a100

nvidia-a100-80gb us-central1-a NVIDIA A100 80GB

nvidia-tesla-a100 us-central1-a NVIDIA A100 40GB

nvidia-tesla-a100 us-central1-b NVIDIA A100 40GB

nvidia-a100-80gb us-central1-c NVIDIA A100 80GB

nvidia-tesla-a100 us-central1-c NVIDIA A100 40GB

nvidia-tesla-a100 us-central1-f NVIDIA A100 40GB

nvidia-tesla-a100 us-west1-b NVIDIA A100 40GB

nvidia-tesla-a100 us-east1-a NVIDIA A100 40GB

nvidia-tesla-a100 us-east1-b NVIDIA A100 40GB

nvidia-tesla-a100 asia-northeast1-a NVIDIA A100 40GB

nvidia-tesla-a100 asia-northeast1-c NVIDIA A100 40GB

nvidia-tesla-a100 asia-southeast1-b NVIDIA A100 40GB

nvidia-a100-80gb asia-southeast1-c NVIDIA A100 80GB

nvidia-tesla-a100 asia-southeast1-c NVIDIA A100 40GB

nvidia-a100-80gb us-east4-c NVIDIA A100 80GB

nvidia-tesla-a100 europe-west4-b NVIDIA A100 40GB

nvidia-a100-80gb europe-west4-a NVIDIA A100 80GB

nvidia-tesla-a100 europe-west4-a NVIDIA A100 40GB

nvidia-tesla-a100 asia-northeast3-a NVIDIA A100 40GB

nvidia-tesla-a100 asia-northeast3-b NVIDIA A100 40GB

nvidia-tesla-a100 us-west3-b NVIDIA A100 40GB

nvidia-tesla-a100 us-west4-b NVIDIA A100 40GB

nvidia-a100-80gb us-east7-a NVIDIA A100 80GB

nvidia-tesla-a100 us-east7-b NVIDIA A100 40GB

nvidia-a100-80gb us-east5-b NVIDIA A100 80GB

nvidia-tesla-a100 me-west1-b NVIDIA A100 40GB

nvidia-tesla-a100 me-west1-c NVIDIA A100 40GB
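To work with this listing programmatically, the output can be grouped by accelerator type. The following is a minimal Python sketch, assuming the three-column text layout shown above; `zones_by_accelerator` is a hypothetical helper name, and `SAMPLE` is only a short excerpt of the output.

```python
from collections import defaultdict

# Excerpt of the `gcloud compute accelerator-types list | grep a100` output above.
SAMPLE = """\
nvidia-a100-80gb us-central1-a NVIDIA A100 80GB
nvidia-tesla-a100 us-central1-a NVIDIA A100 40GB
nvidia-tesla-a100 asia-northeast3-a NVIDIA A100 40GB
nvidia-tesla-a100 asia-northeast3-b NVIDIA A100 40GB
"""


def zones_by_accelerator(listing: str) -> dict:
    """Group zones by accelerator type, using the first two columns of each line."""
    grouped = defaultdict(list)
    for line in listing.splitlines():
        parts = line.split()
        if len(parts) < 2:
            continue  # skip blank or malformed lines
        name, zone = parts[0], parts[1]
        grouped[name].append(zone)
    return dict(grouped)


zones = zones_by_accelerator(SAMPLE)
# Zones in the Seoul region that offer the 40GB A100.
seoul_40gb = [z for z in zones["nvidia-tesla-a100"] if z.startswith("asia-northeast3")]
print(seoul_40gb)  # ['asia-northeast3-a', 'asia-northeast3-b']
```

Grouping by the type name keeps the 40GB (`nvidia-tesla-a100`) and 80GB (`nvidia-a100-80gb`) variants separate, which matters because they are offered in different zones.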

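3. Raising the GPU Quota and Choosing a Compute Engine Machine Type

The NVIDIA A100 80GB is quota-restricted by default, so you must request a quota increase before you can use it.

Use document [1] to find the quota name for the A100 GPU you want, then request a quota increase as described in document [2].

Choose a VM instance machine type that matches your GPU from document [3], then adjust the GPU count as described in document [4] to configure the VM.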

[1] https://cloud.google.com/compute/resource-usage?hl=ko#gpu_quota

[2] https://cloud.google.com/docs/quotas/view-manage?hl=ko#requesting_higher_quota

[3] https://cloud.google.com/compute/docs/gpus/add-remove-gpus?hl=ko#accelerator-optimized_vms

[4] https://cloud.google.com/compute/docs/gpus/add-remove-gpus?hl=ko#modify_the_gpu_count

4. GPU Pricing

Pricing is listed at the link below.

https://cloud.google.com/compute/all-pricing?hl=ko


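For the NVIDIA A100, the approximate prices are as follows.

a2-highgpu-2g = 2x A100 40GB: about 7.9 million KRW per month (about 240,000 KRW per day)

a2-ultragpu-2g = 2x A100 80GB: about 12.4 million KRW per month (about 410,000 KRW per day)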
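Because these VMs are billed for the time they run, it helps to turn the daily figures into a per-job estimate. The sketch below is a rough illustration only: it assumes the approximate daily prices quoted above, divides them evenly over 24 hours, and ignores disks, network, and discounts. `estimate_cost_krw` is a hypothetical helper.

```python
# Approximate daily prices in KRW, taken from the figures quoted above.
DAILY_PRICE_KRW = {
    "a2-highgpu-2g": 240_000,   # 2x A100 40GB
    "a2-ultragpu-2g": 410_000,  # 2x A100 80GB
}


def estimate_cost_krw(machine_type: str, hours: float) -> int:
    """Estimate the cost of keeping a machine type running for a number of hours."""
    hourly = DAILY_PRICE_KRW[machine_type] / 24
    return round(hourly * hours)


# Example: a job that keeps the VM up for 8 hours.
for machine_type in DAILY_PRICE_KRW:
    print(machine_type, estimate_cost_krw(machine_type, 8))
```

Stopping the VM when it is idle is the simplest way to act on such an estimate; always confirm real numbers on the pricing page.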

5. Compute Engine Example Code

The example is written in Terraform. See the GitHub repository for the full code.

https://github.com/somaz94/terraform-infra-gcp/tree/main/project/somaz-ai-project

`compute-engine.tf`

## ai_server ##

resource "google_compute_address" "ai_server_ip" {

name = var.ai_server_ip

region = var.region

}

resource "google_compute_instance" "ai_server" {

name = var.ai_server

machine_type = "a2-highgpu-2g" # a2-ultragpu-2g = 2x A100 80GB / a2-highgpu-2g = 2x A100 40GB

labels = local.default_labels

zone = "${var.region}-a"

allow_stopping_for_update = true

tags = [var.nfs_client]

boot_disk {

initialize_params {

image = "ubuntu-os-cloud/ubuntu-2204-lts"

size = 100

}

}

metadata = {

ssh-keys = "somaz:${file("../../key/ai-server.pub")}"

install-nvidia-driver = "true"

}

network_interface {

network = "projects/${var.host_project}/global/networks/${var.shared_vpc}"

subnetwork = "projects/${var.host_project}/regions/${var.region}/subnetworks/${var.subnet_share}-ai-b"

access_config {

## Include this section to give the VM an external ip ##

nat_ip = google_compute_address.ai_server_ip.address

}

}

scheduling {

on_host_maintenance = "TERMINATE" # GPU VMs cannot live-migrate, so use "TERMINATE" instead of "MIGRATE"

automatic_restart = true

preemptible = false

}

guest_accelerator {

type = "nvidia-tesla-a100" # nvidia-a100-80gb = A100 80GB / nvidia-tesla-a100 = A100 40GB

count = 2

}

depends_on = [google_compute_address.ai_server_ip]

}
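In the Terraform above, `machine_type` and the `guest_accelerator` block have to agree with each other. As a hedged illustration, the Python sketch below encodes only the mapping stated in the code comments (a2-highgpu uses `nvidia-tesla-a100`, a2-ultragpu uses `nvidia-a100-80gb`) and reads the GPU count from the machine type suffix. The helper names are hypothetical and this is not part of the repository.

```python
import re

# Accelerator type per A2 machine family, per the comments in the Terraform code above.
FAMILY_ACCELERATOR = {
    "a2-highgpu": "nvidia-tesla-a100",  # A100 40GB
    "a2-ultragpu": "nvidia-a100-80gb",  # A100 80GB
}


def expected_accelerator(machine_type: str) -> tuple:
    """Return the (accelerator type, GPU count) implied by e.g. 'a2-highgpu-2g'."""
    match = re.fullmatch(r"(a2-(?:highgpu|ultragpu))-(\d+)g", machine_type)
    if match is None:
        raise ValueError(f"unsupported machine type: {machine_type}")
    family, count = match.group(1), int(match.group(2))
    return FAMILY_ACCELERATOR[family], count


def is_consistent(machine_type: str, accel_type: str, accel_count: int) -> bool:
    """Check that a guest_accelerator block matches the machine type."""
    return expected_accelerator(machine_type) == (accel_type, accel_count)


print(is_consistent("a2-highgpu-2g", "nvidia-tesla-a100", 2))   # True
print(is_consistent("a2-ultragpu-2g", "nvidia-tesla-a100", 2))  # False
```

A check like this can run before `terraform plan` to catch a mismatch when switching between the 40GB and 80GB variants.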

6. GPU Server Setup

The OS version is as follows.

lsb_release -a

No LSB modules are available.

Distributor ID: Ubuntu

Description: Ubuntu 22.04.4 LTS

Release: 22.04

Codename: jammy

The product field shows the graphics card name. The card in use is the GA100 [A100 SXM4 40GB].

sudo lshw -c display

*-display:0 UNCLAIMED

description: 3D controller

product: GA100 [A100 SXM4 40GB]

vendor: NVIDIA Corporation

physical id: 4

bus info: pci@0000:00:04.0

version: a1

width: 64 bits

clock: 33MHz

capabilities: msix pm bus_master cap_list

configuration: latency=0

resources: iomemory:300-2ff iomemory:500-4ff memory:81000000-81ffffff memory:3000000000-3fffffffff memory:5000000000-5001ffffff

*-display:1 UNCLAIMED

description: 3D controller

product: GA100 [A100 SXM4 40GB]

vendor: NVIDIA Corporation

physical id: 5

bus info: pci@0000:00:05.0

version: a1

width: 64 bits

clock: 33MHz

capabilities: msix pm bus_master cap_list

configuration: latency=0

resources: iomemory:400-3ff iomemory:500-4ff memory:80000000-80ffffff memory:4000000000-4fffffffff memory:5002000000-5003ffffff

Install the package that identifies which graphics driver to install.

sudo apt update

sudo apt install -y ubuntu-drivers-common

The following command shows which graphics driver should be installed.

We will install nvidia-driver-535, the entry marked `distro non-free recommended`.

sudo ubuntu-drivers devices

ERROR:root:aplay command not found

== /sys/devices/pci0000:00/0000:00:05.0 ==

modalias : pci:v000010DEd000020B0sv000010DEsd0000134Fbc03sc02i00

vendor : NVIDIA Corporation

model : GA100 [A100 SXM4 40GB]

driver : nvidia-driver-545-open - distro non-free

driver : nvidia-driver-470 - distro non-free

driver : nvidia-driver-545 - distro non-free

driver : nvidia-driver-525-server - distro non-free

driver : nvidia-driver-535-server - distro non-free

driver : nvidia-driver-450-server - distro non-free

driver : nvidia-driver-535-server-open - distro non-free

driver : nvidia-driver-525 - distro non-free

driver : nvidia-driver-535 - distro non-free recommended

driver : nvidia-driver-470-server - distro non-free

driver : nvidia-driver-525-open - distro non-free

driver : nvidia-driver-535-open - distro non-free

driver : xserver-xorg-video-nouveau - distro free builtin
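Picking the recommended entry out of this output can be automated. The following Python sketch assumes the `driver : <package> - <flags>` line layout shown above; `recommended_driver` is a hypothetical helper and `SAMPLE` is an excerpt of the real output.

```python
import re
from typing import Optional

# Excerpt of the `ubuntu-drivers devices` output above.
SAMPLE = """\
driver : nvidia-driver-545 - distro non-free
driver : nvidia-driver-535-server - distro non-free
driver : nvidia-driver-535 - distro non-free recommended
driver : xserver-xorg-video-nouveau - distro free builtin
"""


def recommended_driver(output: str) -> Optional[str]:
    """Return the package on the line flagged 'recommended', or None if absent."""
    for line in output.splitlines():
        match = re.match(r"\s*driver\s*:\s*(\S+)\s+-\s+(.*)", line)
        if match and "recommended" in match.group(2).split():
            return match.group(1)
    return None


print(recommended_driver(SAMPLE))  # nvidia-driver-535
```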

Install all the required packages (nvidia-smi, CUDA 11.5, cuDNN 8.6).

sudo apt-get update

sudo apt-get install -y apt-transport-https ca-certificates curl nfs-common

sudo apt-get install -y nvidia-driver-535

sudo apt-get install -y wget git python3 python3-venv python3-pip python3-tk libgl1 libglib2.0-0

sudo apt-get install -y nvidia-cuda-toolkit

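Install cuDNN (after signing up for an NVIDIA developer account, download the version you want).

tar xvf cudnn-linux-x86_64-8.6.0.163_cuda11-archive.tar.xz

cd cudnn-linux-x86_64-8.6.0.163_cuda11-archive

# Copy the header files

sudo cp include/cudnn*.h /usr/include

# Copy the library files (-P preserves the symlinks in the archive)

sudo cp -P lib/libcudnn* /usr/lib/x86_64-linux-gnu

# Set permissions and refresh the library cache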

sudo chmod a+r /usr/include/cudnn*.h /usr/lib/x86_64-linux-gnu/libcudnn*

sudo ldconfig

Check that the graphics cards are recognized.

sudo nvidia-smi

Wed Feb 28 07:35:44 2024

+---------------------------------------------------------------------------------------+

| NVIDIA-SMI 535.161.07 Driver Version: 535.161.07 CUDA Version: 12.2 |

|-----------------------------------------+----------------------+----------------------+

| GPU Name Persistence-M | Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap | Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|=========================================+======================+======================|

| 0 NVIDIA A100-SXM4-40GB Off | 00000000:00:04.0 Off | 0 |

| N/A 29C P0 58W / 400W | 0MiB / 40960MiB | 0% Default |

| | | Disabled |

+-----------------------------------------+----------------------+----------------------+

| 1 NVIDIA A100-SXM4-40GB Off | 00000000:00:05.0 Off | 0 |

| N/A 30C P0 58W / 400W | 0MiB / 40960MiB | 27% Default |

| | | Disabled |

+-----------------------------------------+----------------------+----------------------+

+---------------------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=======================================================================================|

| No running processes found |

+---------------------------------------------------------------------------------------+

Check CUDA

nvcc --version

nvcc: NVIDIA (R) Cuda compiler driver

Copyright (c) 2005-2021 NVIDIA Corporation

Built on Thu_Nov_18_09:45:30_PST_2021

Cuda compilation tools, release 11.5, V11.5.119

Build cuda_11.5.r11.5/compiler.30672275_0

cuDNN test

cat <<EOF > cudnn_test.cpp

#include <cudnn.h>

#include <iostream>

int main() {

cudnnHandle_t cudnn;

cudnnCreate(&cudnn);

std::cout << "CuDNN version: " << CUDNN_VERSION << std::endl;

cudnnDestroy(cudnn);

return 0;

}

EOF

# Compile

nvcc -o cudnn_test cudnn_test.cpp -lcudnn

# Run

./cudnn_test

CuDNN version: 8600
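The printed value is the `CUDNN_VERSION` macro, which in cuDNN 8.x packs the version as major * 1000 + minor * 100 + patch level. The short Python sketch below decodes it under that assumption; newer major versions may use a different encoding, so treat it as specific to the 8.x series installed here. `decode_cudnn_version` is a hypothetical helper.

```python
def decode_cudnn_version(value: int) -> str:
    """Decode a cuDNN 8.x CUDNN_VERSION integer (major*1000 + minor*100 + patch)."""
    major, remainder = divmod(value, 1000)
    minor, patch = divmod(remainder, 100)
    return f"{major}.{minor}.{patch}"


print(decode_cudnn_version(8600))  # 8.6.0
```

So `8600` corresponds to cuDNN 8.6.0, matching the archive that was installed.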

(Optional) Setting up nvidia-docker

sudo apt install docker.io

distribution=$(. /etc/os-release;echo $ID$VERSION_ID) \
&& curl -s -L https://nvidia.github.io/nvidia-docker/gpgkey | sudo apt-key add - \
&& curl -s -L https://nvidia.github.io/nvidia-docker/$distribution/nvidia-docker.list | sudo tee /etc/apt/sources.list.d/nvidia-docker.list

sudo apt update

sudo apt-get install -y nvidia-docker2

sudo systemctl restart docker

# test

sudo docker run --rm --gpus all ubuntu:18.04 nvidia-smi

Wed Feb 28 09:17:32 2024

+---------------------------------------------------------------------------------------+

| NVIDIA-SMI 535.161.07 Driver Version: 535.161.07 CUDA Version: 12.2 |

|-----------------------------------------+----------------------+----------------------+

| GPU Name Persistence-M | Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap | Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|=========================================+======================+======================|

| 0 NVIDIA A100-SXM4-40GB Off | 00000000:00:04.0 Off | 0 |

| N/A 26C P0 52W / 400W | 0MiB / 40960MiB | 0% Default |

| | | Disabled |

+-----------------------------------------+----------------------+----------------------+

| 1 NVIDIA A100-SXM4-40GB Off | 00000000:00:05.0 Off | 0 |

| N/A 27C P0 51W / 400W | 0MiB / 40960MiB | 0% Default |

| | | Disabled |

+-----------------------------------------+----------------------+----------------------+

+---------------------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=======================================================================================|

| No running processes found |

+---------------------------------------------------------------------------------------+

7. Application Setup

The GPU server will run the following services.

- https://github.com/AUTOMATIC1111/stable-diffusion-webui

- https://github.com/bmaltais/kohya_ss

- https://github.com/comfyanonymous/ComfyUI

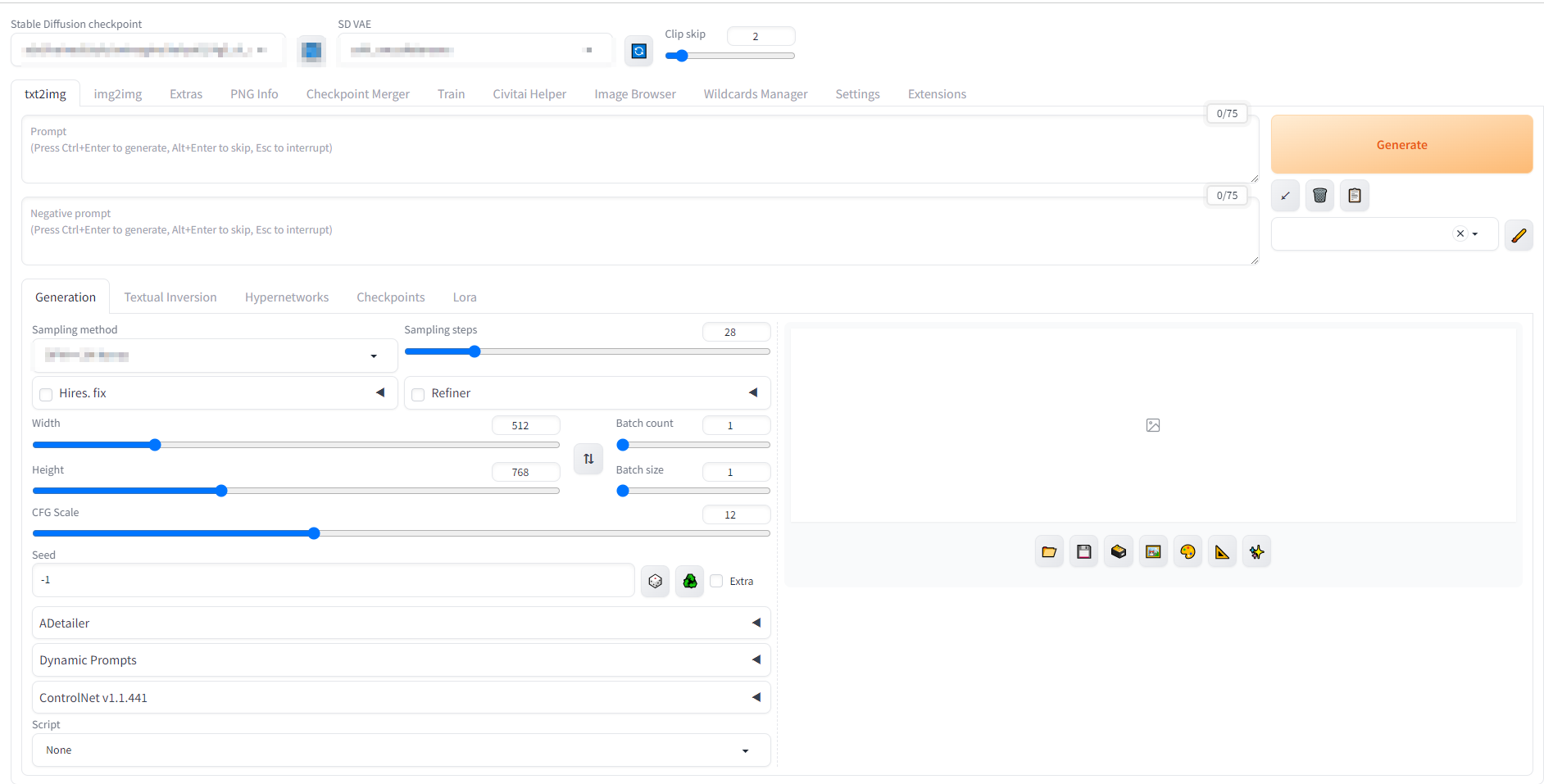

Stable Diffusion

wget -q https://raw.githubusercontent.com/AUTOMATIC1111/stable-diffusion-webui/master/webui.sh

chmod +x webui.sh

# Run 127.0.0.1 listen

./webui.sh

# Run 0.0.0.0 listen

./webui.sh --listen

...

################################################################

Install script for stable-diffusion + Web UI

Tested on Debian 11 (Bullseye), Fedora 34+ and openSUSE Leap 15.4 or newer.

################################################################

################################################################

Running on nerdystar user

################################################################

################################################################

Create and activate python venv

################################################################

################################################################

Launching launch.py...

################################################################

Cannot locate TCMalloc (improves CPU memory usage)

Python 3.10.12 (main, Nov 20 2023, 15:14:05) [GCC 11.4.0]

Version: v1.7.0

Commit hash: cf2772fab0af5573da775e7437e6acdca424f26e

Launching Web UI with arguments: --listen

no module 'xformers'. Processing without...

no module 'xformers'. Processing without...

No module 'xformers'. Proceeding without it.

Style database not found: /home/somaz/stable-diffusion-webui/styles.csv

Loading weights [6ce0161689] from /home/somaz/stable-diffusion-webui/models/Stable-diffusion/v1-5-pruned-emaonly.safetensors

Running on local URL:

To create a public link, set `share=True` in `launch()`.

Startup time: 9.6s (prepare environment: 1.8s, import torch: 3.4s, import gradio: 1.0s, setup paths: 1.0s, initialize shared: 0.3s, other imports: 0.7s, load scripts: 0.6s, create ui: 0.7s, gradio launch: 0.2s).

Creating model from config: /home/somaz/stable-diffusion-webui/configs/v1-inference.yaml

Applying attention optimization: Doggettx... done.

Model loaded in 3.4s (load weights from disk: 1.0s, create model: 0.5s, apply weights to model: 1.6s, calculate empty prompt: 0.1s).

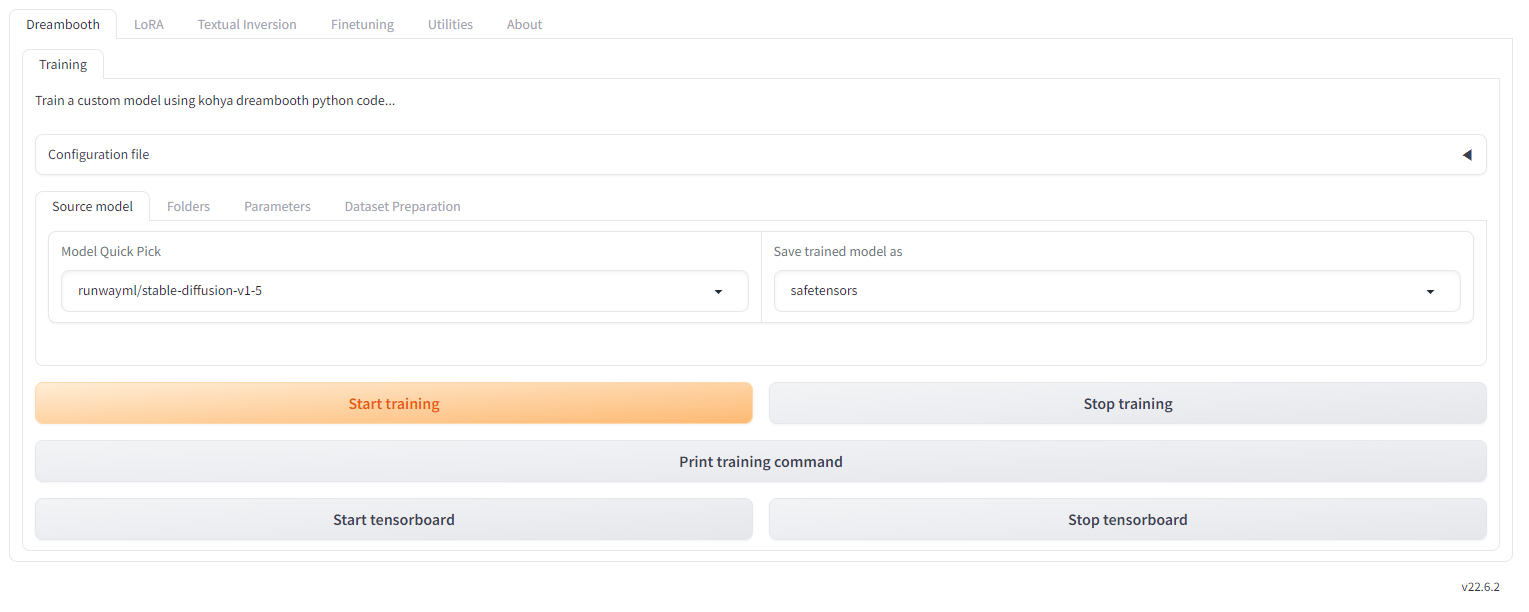

Kohya_ss

export LD_LIBRARY_PATH=/usr/lib/wsl/lib/

git clone https://github.com/bmaltais/kohya_ss.git

cd kohya_ss

chmod +x ./setup.sh

# Setup

./setup.sh

...

Skipping git operations.

Ubuntu detected.

Python TK found...

Switching to virtual Python environment.

03:34:28-808661 INFO Installing python dependencies. This could take a few minutes as it downloads files.

03:34:28-813026 INFO If this operation ever runs too long, you can rerun this script in verbose mode to check.

03:34:28-814191 INFO Version: v22.6.2

03:34:28-815351 INFO Python 3.10.12 on Linux

03:34:28-816879 INFO Installing modules from requirements_linux.txt...

03:34:28-818034 INFO Installing package: torch==2.0.1+cu118 torchvision==0.15.2+cu118 --extra-index-url https://download.pytorch.org/whl/cu118

03:36:43-638653 INFO Installing package: xformers==0.0.21 bitsandbytes==0.41.1

03:37:00-382834 INFO Installing package: tensorboard==2.14.1 tensorflow==2.14.0

03:37:46-456089 INFO Installing modules from requirements.txt...

03:37:46-457608 INFO Installing package: accelerate==0.25.0

03:37:49-715838 INFO Installing package: aiofiles==23.2.1

03:37:50-767395 INFO Installing package: altair==4.2.2

03:37:58-634632 INFO Installing package: dadaptation==3.1

03:38:04-154425 INFO Installing package: diffusers[torch]==0.25.0

03:38:10-102742 INFO Installing package: easygui==0.98.3

03:38:12-237355 INFO Installing package: einops==0.7.0

03:38:14-672360 INFO Installing package: fairscale==0.4.13

03:38:24-729842 INFO Installing package: ftfy==6.1.1

03:38:26-519145 INFO Installing package: gradio==3.50.2

03:38:38-588672 INFO Installing package: huggingface-hub==0.20.1

03:38:40-795757 INFO Installing package: invisible-watermark==0.2.0

03:38:45-053517 INFO Installing package: lion-pytorch==0.0.6

03:38:46-922057 INFO Installing package: lycoris_lora==2.0.2

03:38:56-068025 INFO Installing package: omegaconf==2.3.0

03:38:59-041401 INFO Installing package: onnx==1.14.1

03:39:03-322517 INFO Installing package: onnxruntime-gpu==1.16.0

03:39:12-741226 INFO Installing package: protobuf==3.20.3

03:39:15-532665 INFO Installing package: open-clip-torch==2.20.0

03:39:19-687333 INFO Installing package: opencv-python==4.7.0.68

03:39:24-698842 INFO Installing package: prodigyopt==1.0

03:39:27-033306 INFO Installing package: pytorch-lightning==1.9.0

03:39:32-503645 INFO Installing package: rich==13.7.0

03:39:35-219998 INFO Installing package: safetensors==0.4.2

03:39:37-597958 INFO Installing package: timm==0.6.12

03:39:40-652363 INFO Installing package: tk==0.1.0

03:39:43-141926 INFO Installing package: toml==0.10.2

03:39:46-008144 INFO Installing package: transformers==4.36.2

03:39:55-391264 INFO Installing package: voluptuous==0.13.1

03:39:57-729494 INFO Installing package: wandb==0.15.11

03:40:04-541910 INFO Installing package: scipy==1.11.4

03:40:11-364765 INFO Installing package: -e .

03:40:18-252548 INFO Configuring accelerate...

03:40:18-253988 WARNING Could not automatically configure accelerate. Please manually configure accelerate with the option in the menu or with: accelerate config.

Exiting Python virtual environment.

Setup finished! Run ./gui.sh to start.

Please note if you'd like to expose your public server you need to run ./gui.sh --share

# Run 127.0.0.1 listen

./gui.sh

# Run 0.0.0.0 listen

./gui.sh --listen=0.0.0.0 --headless

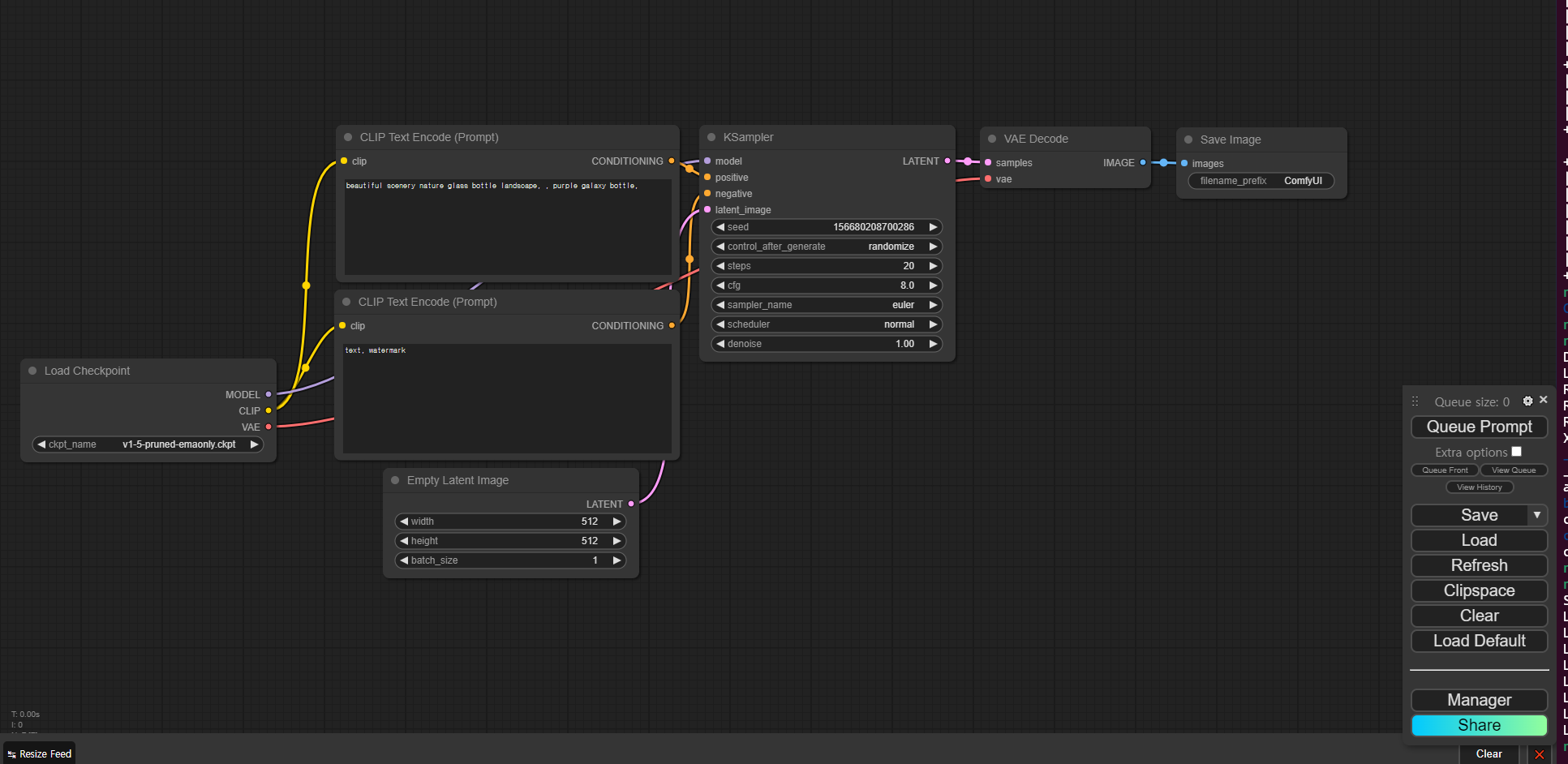

ComfyUI

For NVIDIA GPU users

- Install PyTorch:

- Stable version: pip install torch torchvision torchaudio --extra-index-url

- Nightly version: pip install --pre torch torchvision torchaudio --index-url

git clone https://github.com/comfyanonymous/ComfyUI.git

cd ComfyUI

pip install -r requirements.txt

pip install torch torchvision torchaudio --extra-index-url https://download.pytorch.org/whl/cu121

# Run 127.0.0.1 listen

python3 main.py

# Run 0.0.0.0 listen

python3 main.py --listen 0.0.0.0
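After starting a UI with a `0.0.0.0` listen address, it is worth checking that the port is actually reachable. The Python sketch below is a generic TCP reachability check, not something shipped by any of these projects. `is_port_open` is a hypothetical helper, and the ports mentioned in the usage note are assumptions to verify against each startup log.

```python
import socket


def is_port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


# Self-check against a throwaway local listener so the sketch runs anywhere.
listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
listener.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
listener.listen(1)
free_port = listener.getsockname()[1]
print(is_port_open("127.0.0.1", free_port))
listener.close()
```

In practice you would call something like `is_port_open("<external-ip>", 7860)` for the Stable Diffusion web UI or `is_port_open("<external-ip>", 8188)` for ComfyUI, assuming those default ports. A `False` result from outside the VM usually means that the process is bound to 127.0.0.1 only or that a firewall rule is blocking the port.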

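A100 GPU Variants (SXM4 vs PCIe)

| Model | Form factor | Memory | Memory bandwidth | Use case |
|---|---|---|---|---|
| A100 SXM4 | SXM4 (NVLink) | 40GB / 80GB | about 1.6TB/s (40GB) / 2TB/s+ (80GB) | High-performance clusters (HPC, AI training) |
| A100 PCIe | PCIe | 40GB / 80GB | about 1.6TB/s (40GB) / about 1.9TB/s (80GB) | General-purpose GPU servers (inference, video processing, etc.) |

- GCP A2 VMs provide the SXM4 type, as the `nvidia-smi` output above shows (`NVIDIA A100-SXM4-40GB`).

- SXM4 targets high-performance workloads; the PCIe type is mostly relevant when building on-premises servers.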

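Recommended VM Settings (Operational Tips)

GPU servers are expensive, so using them efficiently matters. The following settings are also recommended.

- Use the Preemptible VM option (Spot VMs can be used instead; reduces cost)

- Use a custom VM type to size RAM to what you need (A2 machine types are predefined, so this applies to GPU VMs built on other machine families)

- Use Local SSD (a scratch disk for fast reads and writes)

- Install the driver automatically with a startup script (automation)

Example (automatic driver installation via `startup-script`)

#!/bin/bash

sudo apt-get update

sudo apt-get install -y nvidia-driver-535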

GPU Partitioning (MIG - Multi-Instance GPU)

The A100 supports MIG, which splits a single GPU into multiple isolated instances.

On GCP, MIG can be used on `a2-megagpu` machine types, which is useful when running AI inference servers.

| VM type | GPUs | MIG instances |
|---|---|---|
| a2-megagpu-16g | 16 | up to 112 |
| a2-ultragpu-1g | 1 | up to 7 |
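The instance counts in the table follow from simple arithmetic: each A100 can be split into at most 7 MIG instances, so the ceiling for a VM is the GPU count times 7. A minimal Python sketch of that calculation, with `max_mig_instances` as a hypothetical helper:

```python
MAX_MIG_PER_A100 = 7  # an A100 can be partitioned into at most 7 MIG instances


def max_mig_instances(gpu_count: int) -> int:
    """Upper bound on MIG instances for a VM with the given number of A100 GPUs."""
    if gpu_count < 1:
        raise ValueError("gpu_count must be at least 1")
    return gpu_count * MAX_MIG_PER_A100


for vm_type, gpus in [("a2-megagpu-16g", 16), ("a2-ultragpu-1g", 1)]:
    print(vm_type, max_mig_instances(gpus))
# a2-megagpu-16g 112
# a2-ultragpu-1g 7
```

The actual number depends on the MIG profile chosen; larger profiles yield fewer instances per GPU.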

Grafana + NVIDIA DCGM Exporter Integration (Monitoring)

Metrics that are essential to monitor in long-term operation:

- GPU Utilization

- Memory Usage

- Temperature

- Power Usage
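Before wiring up DCGM Exporter and Grafana, the same four metrics can be sampled directly from `nvidia-smi`. The Python sketch below is a lightweight stand-in, not the DCGM exporter itself. It assumes the `--query-gpu` fields `utilization.gpu`, `memory.used`, `temperature.gpu`, and `power.draw`, and that every field is numeric (a value such as `[N/A]` would need extra handling). The function names are hypothetical.

```python
import subprocess

QUERY_FIELDS = "utilization.gpu,memory.used,temperature.gpu,power.draw"
KEYS = ["util_percent", "memory_used_mib", "temperature_c", "power_w"]


def parse_gpu_metrics(csv_text: str) -> list:
    """Parse `--format=csv,noheader,nounits` output into one dict per GPU."""
    metrics = []
    for line in csv_text.strip().splitlines():
        values = [float(v) for v in line.split(",")]
        metrics.append(dict(zip(KEYS, values)))
    return metrics


def read_gpu_metrics() -> list:
    """Query the local driver; requires nvidia-smi on PATH."""
    out = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY_FIELDS}", "--format=csv,noheader,nounits"],
        check=True, capture_output=True, text=True,
    ).stdout
    return parse_gpu_metrics(out)


# Offline example with values shaped like the nvidia-smi table earlier in this post.
for gpu in parse_gpu_metrics("0, 0, 29, 58.00\n27, 0, 30, 58.00\n"):
    print(gpu)
```

On the GPU server itself, `read_gpu_metrics()` returns live values that could be logged on a schedule until a full Grafana dashboard is in place.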

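Recommended Benchmark Tools

The following tools are commonly used for GPU performance testing.

- nvidia-smi dmon (real-time monitoring)

- cuda-samples (CUDA benchmarks)

- tensorflow_benchmark (AI training performance measurement)

- fio (disk I/O performance check)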

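Caveats (Quota and Region Limits)

- On GCP, newer accounts tend to have very limited GPU quota.

- Plan quota increase requests in advance (approval can take a week or more).

- In some regions, GPU allocation can fail because of limited stock.

Wrapping Up

- Setting up a GPU server on GCP is more than creating a VM: it involves many considerations, from choosing a region and zone to installing drivers, tuning performance, and monitoring.

- I hope this write-up helps you understand GPU server setup from A to Z and prepare every task you actually need.

- In particular, managing the setup with Terraform as IaC (Infrastructure as Code) makes the GPU environment easier to operate and to scale.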

Reference

https://cloud.google.com/compute/docs/gpus?hl=ko

https://github.com/somaz94/terraform-infra-gcp/tree/main/project/somaz-ai-project

https://cloud.google.com/blog/products/gcp/announcing-gpus-for-google-cloud-platform/?hl=en

https://cloud.google.com/compute/resource-usage?hl=ko#gpu_quota

https://cloud.google.com/docs/quotas/view-manage?hl=ko#requesting_higher_quota

https://cloud.google.com/compute/docs/gpus/add-remove-gpus?hl=ko#accelerator-optimized_vms

https://cloud.google.com/compute/docs/gpus/add-remove-gpus?hl=ko#modify_the_gpu_count

https://cloud.google.com/compute/all-pricing?hl=ko

https://github.com/AUTOMATIC1111/stable-diffusion-webui

https://github.com/bmaltais/kohya_ss

https://github.com/comfyanonymous/ComfyUI

https://webnautes.tistory.com/1843

NVIDIA official GPU optimization guide: https://docs.nvidia.com/datacenter/tesla/index.html

NVIDIA Container Toolkit: https://docs.nvidia.com/datacenter/cloud-native/container-toolkit/latest/

GPU Monitoring (DCGM): https://developer.nvidia.com/dcgm
