Overview

This post walks through the full process of deploying and operating Ceph storage on a Kubernetes cluster with Rook-Ceph.

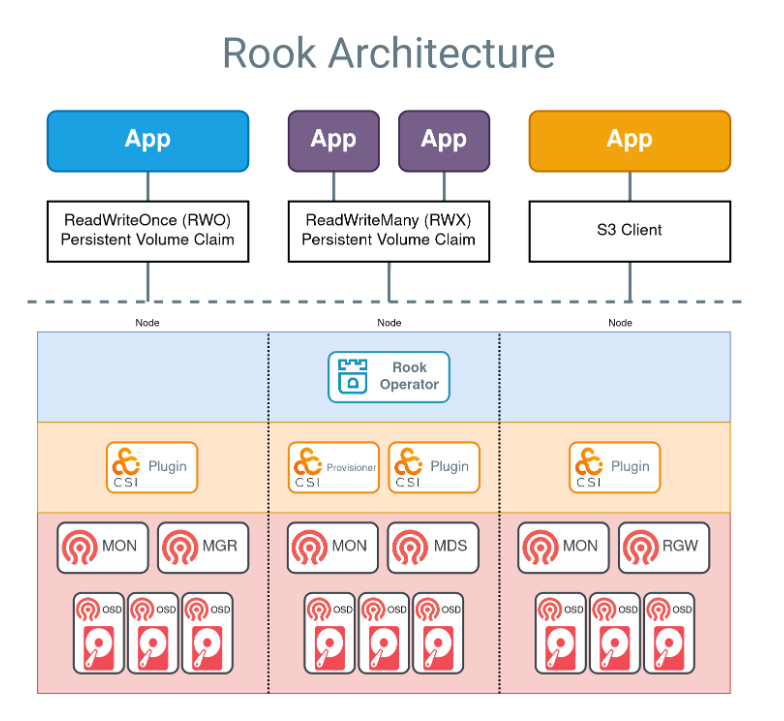

Rook is an open-source orchestration tool that manages Ceph in a Kubernetes-native way. It abstracts a complex distributed storage system into Kubernetes resources, which makes it easier to install and operate.

In this setup, a Kubernetes cluster was built with Kubespray on Google Cloud VMs, and then the Rook Operator and the Rook-Ceph cluster were installed from Helm charts. A separate storage node was provisioned for the Ceph OSDs, and Rook's values.yaml was customized to configure and install MON, MGR, OSD, MDS, and the Object Store.

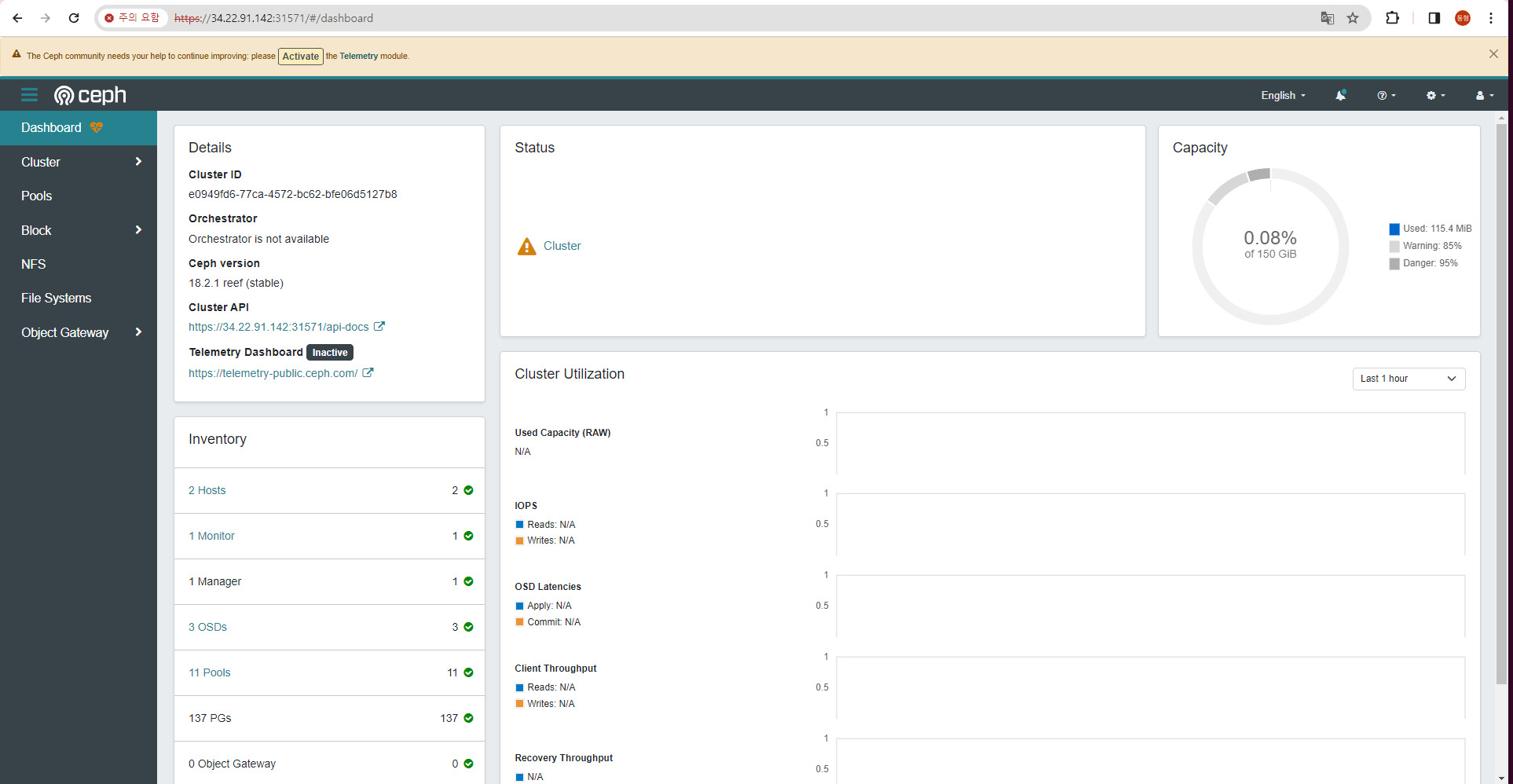

After that, an RBD-based StorageClass was set up so that Pods can use Ceph block storage, and finally the Ceph Dashboard was enabled to check web UI based cluster monitoring.

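What is Cephadm?

Cephadm is Ceph's current deployment and management tool, introduced with the Ceph Octopus release.

It was designed to simplify deploying, configuring, managing, and scaling a Ceph cluster. It bootstraps a cluster with a single command and deploys Ceph services as containers.

Cephadm does not depend on external configuration tools such as Ansible, Rook, or Salt. Those tools can still be used to automate tasks that cephadm itself does not perform.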

- https://github.com/ceph/cephadm-ansible

- https://rook.io/docs/rook/v1.10/Getting-Started/intro/

- https://github.com/ceph/ceph-salt


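What is Rook-Ceph?

Ceph is a highly scalable distributed storage solution for block storage, object storage, and shared filesystems, backed by years of production deployments.

Rook is an open-source orchestration framework for deploying, operating, and managing distributed storage systems on Kubernetes. It focuses on managing Ceph automatically inside a Kubernetes cluster and on integrating the storage solution into a cloud-native environment. With Rook, a complex distributed storage system such as Ceph becomes easier to deploy and manage while still benefiting from Kubernetes automation and management features.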

Installing Kubernetes

See the post below for the cluster installation. Note that the storage node needs at least 32 GB of memory.

2024.02.02 - [Container Orchestration/Kubernetes] - Building a Kubernetes Cluster (kubespray 2024v.) (somaz.tistory.com)

| Role | Hostname | IP | CPU | Memory |
|---|---|---|---|---|
| Master Node (Control Plane) | test-server | 10.77.101.18 | 16 | 32G |
| Worker Node | test-server-agent | 10.77.101.12 | 16 | 32G |
| Worker Node | test-server-storage | 10.77.101.16 | 16 | 32G |

On top of the Terraform code from that post, only the following resources for the storage node need to be added.

## test_server_storage ##
resource "google_compute_address" "test_server_storage_ip" {
  name = var.test_server_storage_ip
}

resource "google_compute_instance" "test_server_storage" {
  name                      = var.test_server_storage
  machine_type              = "n2-standard-8"
  labels                    = local.default_labels
  zone                      = "${var.region}-a"
  allow_stopping_for_update = true

  tags = [var.kubernetes_client]

  boot_disk {
    initialize_params {
      image = "ubuntu-os-cloud/ubuntu-2004-lts"
      size  = 50
    }
  }

  # Three extra disks, one per Ceph OSD
  attached_disk {
    source      = google_compute_disk.additional_test_storage_1_pd_balanced.self_link
    device_name = google_compute_disk.additional_test_storage_1_pd_balanced.name
  }

  attached_disk {
    source      = google_compute_disk.additional_test_storage_2_pd_balanced.self_link
    device_name = google_compute_disk.additional_test_storage_2_pd_balanced.name
  }

  attached_disk {
    source      = google_compute_disk.additional_test_storage_3_pd_balanced.self_link
    device_name = google_compute_disk.additional_test_storage_3_pd_balanced.name
  }

  metadata = {
    ssh-keys = "somaz:${file("~/.ssh/id_rsa_somaz94.pub")}"
  }

  network_interface {
    network    = var.shared_vpc
    subnetwork = "${var.subnet_share}-mgmt-a"

    access_config {
      ## Include this section to give the VM an external ip ##
      nat_ip = google_compute_address.test_server_storage_ip.address
    }
  }

  depends_on = [
    google_compute_address.test_server_storage_ip,
    google_compute_disk.additional_test_storage_1_pd_balanced,
    google_compute_disk.additional_test_storage_2_pd_balanced,
    google_compute_disk.additional_test_storage_3_pd_balanced
  ]
}

resource "google_compute_disk" "additional_test_storage_1_pd_balanced" {
  name = "test-storage-disk-1"
  type = "pd-balanced"
  zone = "${var.region}-a"
  size = 50
}

resource "google_compute_disk" "additional_test_storage_2_pd_balanced" {
  name = "test-storage-disk-2"
  type = "pd-balanced"
  zone = "${var.region}-a"
  size = 50
}

resource "google_compute_disk" "additional_test_storage_3_pd_balanced" {
  name = "test-storage-disk-3"
  type = "pd-balanced"
  zone = "${var.region}-a"
  size = 50
}

Follow the blog post above for the installation itself. In the commands below, `k` is an alias for `kubectl`.

k get nodes

NAME STATUS ROLES AGE VERSION

test-server Ready control-plane 55s v1.29.1

test-server-agent Ready <none> 55s v1.29.1

test-server-storage Ready <none> 55s v1.29.1

Installing the rook-ceph Operator

Clone the Rook repository.

git clone https://github.com/rook/rook.git

cd ~/rook/deploy/charts/rook-ceph

cp values.yaml somaz-values.yaml

https://github.com/rook/rook/tree/master/deploy/charts/rook-ceph

helm repo add rook-release https://charts.rook.io/release

helm repo update

# Install from the local git repo
helm install --create-namespace --namespace rook-ceph rook-ceph \
  . -f somaz-values.yaml

# Upgrade
helm upgrade --create-namespace --namespace rook-ceph rook-ceph \
  . -f somaz-values.yaml
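The separate install and upgrade commands can also be folded into one idempotent call. This is only a sketch, assuming it runs from the chart directory with `somaz-values.yaml` present. The function name `install_rook_operator` is hypothetical; `helm upgrade --install` and `--wait` are standard Helm flags.

```shell
# Hypothetical wrapper: installs the operator chart on the first run and upgrades it afterwards.
install_rook_operator() {
  # --install makes "upgrade" behave like "install" when the release does not exist yet;
  # --wait blocks until the chart's resources report ready.
  helm upgrade --install rook-ceph . \
    --namespace rook-ceph --create-namespace \
    -f somaz-values.yaml --wait
}

# Usage (from ~/rook/deploy/charts/rook-ceph):
#   install_rook_operator
```

Running the same function again after editing `somaz-values.yaml` applies the change as an upgrade, so there is no need to remember which of the two commands applies.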

Verify

k get crd

NAME CREATED AT

bgpconfigurations.crd.projectcalico.org 2024-02-05T03:24:28Z

bgpfilters.crd.projectcalico.org 2024-02-05T03:24:28Z

bgppeers.crd.projectcalico.org 2024-02-05T03:24:28Z

blockaffinities.crd.projectcalico.org 2024-02-05T03:24:28Z

caliconodestatuses.crd.projectcalico.org 2024-02-05T03:24:28Z

cephblockpoolradosnamespaces.ceph.rook.io 2024-02-05T06:13:42Z

cephblockpools.ceph.rook.io 2024-02-05T06:13:43Z

cephbucketnotifications.ceph.rook.io 2024-02-05T06:13:42Z

cephbuckettopics.ceph.rook.io 2024-02-05T06:13:42Z

cephclients.ceph.rook.io 2024-02-05T06:13:42Z

cephclusters.ceph.rook.io 2024-02-05T06:13:42Z

cephcosidrivers.ceph.rook.io 2024-02-05T06:13:42Z

cephfilesystemmirrors.ceph.rook.io 2024-02-05T06:13:42Z

cephfilesystems.ceph.rook.io 2024-02-05T06:13:42Z

cephfilesystemsubvolumegroups.ceph.rook.io 2024-02-05T06:13:42Z

cephnfses.ceph.rook.io 2024-02-05T06:13:42Z

cephobjectrealms.ceph.rook.io 2024-02-05T06:13:42Z

cephobjectstores.ceph.rook.io 2024-02-05T06:13:42Z

cephobjectstoreusers.ceph.rook.io 2024-02-05T06:13:42Z

cephobjectzonegroups.ceph.rook.io 2024-02-05T06:13:42Z

cephobjectzones.ceph.rook.io 2024-02-05T06:13:43Z

cephrbdmirrors.ceph.rook.io 2024-02-05T06:13:42Z

clusterinformations.crd.projectcalico.org 2024-02-05T03:24:28Z

felixconfigurations.crd.projectcalico.org 2024-02-05T03:24:28Z

globalnetworkpolicies.crd.projectcalico.org 2024-02-05T03:24:28Z

globalnetworksets.crd.projectcalico.org 2024-02-05T03:24:29Z

hostendpoints.crd.projectcalico.org 2024-02-05T03:24:29Z

ipamblocks.crd.projectcalico.org 2024-02-05T03:24:29Z

ipamconfigs.crd.projectcalico.org 2024-02-05T03:24:29Z

ipamhandles.crd.projectcalico.org 2024-02-05T03:24:29Z

ippools.crd.projectcalico.org 2024-02-05T03:24:29Z

ipreservations.crd.projectcalico.org 2024-02-05T03:24:29Z

kubecontrollersconfigurations.crd.projectcalico.org 2024-02-05T03:24:29Z

networkpolicies.crd.projectcalico.org 2024-02-05T03:24:29Z

networksets.crd.projectcalico.org 2024-02-05T03:24:29Z

objectbucketclaims.objectbucket.io 2024-02-05T06:13:42Z

objectbuckets.objectbucket.io 2024-02-05T06:13:42Z

k get po -n rook-ceph

NAME READY STATUS RESTARTS AGE

rook-ceph-operator-799cf9f45-946r6 1/1 Running 0 28s

Installing the rook-ceph Cluster

Check the disks on test-server-storage, then wipe them.

lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

loop0 7:0 0 63.9M 1 loop /snap/core20/2105

loop1 7:1 0 368.2M 1 loop /snap/google-cloud-cli/207

loop2 7:2 0 40.4M 1 loop /snap/snapd/20671

loop3 7:3 0 91.9M 1 loop /snap/lxd/24061

sda 8:0 0 50G 0 disk

├─sda1 8:1 0 49.9G 0 part /

├─sda14 8:14 0 4M 0 part

└─sda15 8:15 0 106M 0 part /boot/efi

sdb 8:16 0 50G 0 disk

sdc 8:32 0 50G 0 disk

sdd 8:48 0 50G 0 disk

# Wipe the disks

sudo wipefs -a /dev/sdb

sudo wipefs -a /dev/sdc

sudo wipefs -a /dev/sdd
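Typing device names by hand is easy to get wrong, and `wipefs -a` on the boot disk is not recoverable. As a rough sketch, the candidate list can be derived from `lsblk` instead. The helper name `unpartitioned_disks` is hypothetical, and it assumes that a whole disk without partitions is a safe OSD candidate, which should still be checked by eye.

```shell
# Hypothetical helper: reads `lsblk -rn -o NAME,TYPE,PKNAME` output on stdin and
# prints whole disks that own no partitions (here: sdb, sdc, sdd but not sda).
unpartitioned_disks() {
  awk '
    $2 == "part" { has_part[$3] = 1 }   # remember every disk that owns a partition
    $2 == "disk" { disks[++n] = $1 }    # keep disks in the order lsblk lists them
    END {
      for (i = 1; i <= n; i++)
        if (!(disks[i] in has_part)) print "/dev/" disks[i]
    }'
}

# Usage on the storage node (review the list before wiping anything):
#   lsblk -rn -o NAME,TYPE,PKNAME | unpartitioned_disks
#   lsblk -rn -o NAME,TYPE,PKNAME | unpartitioned_disks | xargs -n1 sudo wipefs -a
```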

Write the override values file (`somaz-values.yaml` in this post).

cd ~/rook/deploy/charts/rook-ceph-cluster

cp values.yaml somaz-values.yaml

vi somaz-values.yaml

configOverride: |
  [global]
  mon_allow_pool_delete = true
  osd_pool_default_size = 1
  osd_pool_default_min_size = 1
...
# Installs a debugging toolbox deployment
toolbox:
  # -- Enable Ceph debugging pod deployment. See [toolbox](../Troubleshooting/ceph-toolbox.md)
  enabled: true
  # -- Toolbox image, defaults to the image used by the Ceph cluster
  image: #quay.io/ceph/ceph:v18.2.1
  # -- Toolbox tolerations
  tolerations: []
  # -- Toolbox affinity
  affinity: {}
...
  # (mon, mgr, and storage below sit under cephClusterSpec in the chart's values.yaml)
  mon:
    # Set the number of mons to be started. Generally recommended to be 3.
    # For highest availability, an odd number of mons should be specified.
    count: 1
    # The mons should be on unique nodes. For production, at least 3 nodes are recommended for this reason.
    # Mons should only be allowed on the same node for test environments where data loss is acceptable.
    allowMultiplePerNode: false
  mgr:
    # When higher availability of the mgr is needed, increase the count to 2.
    # In that case, one mgr will be active and one in standby. When Ceph updates which
    # mgr is active, Rook will update the mgr services to match the active mgr.
    count: 1
    allowMultiplePerNode: false
    modules:
      # Several modules should not need to be included in this list. The "dashboard" and "monitoring" modules
      # are already enabled by other settings in the cluster CR.
      - name: pg_autoscaler
        enabled: true
...
  storage: # cluster level storage configuration and selection
    useAllNodes: false
    useAllDevices: false
    # deviceFilter:
    # config:
    #   crushRoot: "custom-root" # specify a non-default root label for the CRUSH map
    #   metadataDevice: "md0" # specify a non-rotational storage so ceph-volume will use it as block db device of bluestore.
    #   databaseSizeMB: "1024" # uncomment if the disks are smaller than 100 GB
    #   osdsPerDevice: "1" # this value can be overridden at the node or device level
    #   encryptedDevice: "true" # the default value for this option is "false"
    # # Individual nodes and their config can be specified as well, but 'useAllNodes' above must be set to false. Then, only the named
    # # nodes below will be used as storage resources. Each node's 'name' field should match their 'kubernetes.io/hostname' label.
    nodes:
      - name: "test-server-storage"
        devices:
          - name: "sdb"
          - name: "sdc"
          - name: "sdd"
...
cephBlockPools:
  - name: ceph-blockpool
    # see https://github.com/rook/rook/blob/master/Documentation/CRDs/Block-Storage/ceph-block-pool-crd.md#spec for available configuration
    spec:
      failureDomain: host
      replicated:
        size: 1
      # Enables collecting RBD per-image IO statistics by enabling dynamic OSD performance counters. Defaults to false.
      # For reference: https://docs.ceph.com/docs/master/mgr/prometheus/#rbd-io-statistics
      # enableRBDStats: true
...
cephFileSystems:
  - name: ceph-filesystem
    # see https://github.com/rook/rook/blob/master/Documentation/CRDs/Shared-Filesystem/ceph-filesystem-crd.md#filesystem-settings for available configuration
    spec:
      metadataPool:
        replicated:
          size: 1
      dataPools:
        - failureDomain: host
          replicated:
            size: 1
...
    storageClass:
      enabled: false
      isDefault: false
      name: ceph-filesystem
...
cephObjectStores:
  - name: ceph-objectstore
    # see https://github.com/rook/rook/blob/master/Documentation/CRDs/Object-Storage/ceph-object-store-crd.md#object-store-settings for available configuration
    spec:
      metadataPool:
        failureDomain: host
        replicated:
          size: 1
      dataPool:
        failureDomain: host
        erasureCoded:
          dataChunks: 2
          codingChunks: 1
      preservePoolsOnDelete: true
      gateway:
        port: 80
        resources:
          limits:
            cpu: "2000m"
            memory: "2Gi"
          requests:
            cpu: "1000m"
            memory: "1Gi"
        # securePort: 443
        # sslCertificateRef:
        instances: 1
        priorityClassName: system-cluster-critical
    storageClass:
      enabled: false
      name: ceph-bucket
      reclaimPolicy: Delete
      volumeBindingMode: "Immediate"
      # see https://github.com/rook/rook/blob/master/Documentation/Storage-Configuration/Object-Storage-RGW/ceph-object-bucket-claim.md#storageclass for available configuration
      parameters:
        # note: objectStoreNamespace and objectStoreName are configured by the chart
        region: us-east-1
...

https://github.com/rook/rook/blob/master/deploy/charts/rook-ceph-cluster/values.yaml

helm repo add rook-release https://charts.rook.io/release

helm repo update

# Install from the local git repo
helm install --create-namespace --namespace rook-ceph rook-ceph-cluster \
  --set operatorNamespace=rook-ceph . -f somaz-values.yaml

# Upgrade
helm upgrade --create-namespace --namespace rook-ceph rook-ceph-cluster \
  --set operatorNamespace=rook-ceph . -f somaz-values.yaml

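If the installation goes wrong, tear everything down, remove the existing filesystem and partition signatures from the disks before reusing them, and then reinstall with Helm. Rook's cleanup documentation also calls for removing the `dataDirHostPath` directory (`/var/lib/rook` by default) on each node before a reinstall.

If a resource does not get deleted cleanly, remove its `finalizers` with `kubectl edit`. In this walkthrough the deletion order did not matter; just confirm that every resource is actually gone.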

# Delete the Ceph cluster
helm delete -n rook-ceph rook-ceph-cluster

# Delete the resources
k delete cephblockpool -n rook-ceph ceph-blockpool
k delete cephobjectstore -n rook-ceph ceph-objectstore
k delete cephfilesystem -n rook-ceph ceph-filesystem
k delete cephfilesystemsubvolumegroups.ceph.rook.io -n rook-ceph ceph-filesystem-csi

# Delete all Ceph-related CRDs at once (-F matches the group name literally)
kubectl get crd -o name | grep -F 'ceph.rook.io' | xargs kubectl delete
kubectl get crd -o name | grep -F 'objectbucket.io' | xargs kubectl delete

# Delete the operator
helm delete -n rook-ceph rook-ceph

# Delete any remaining resources
kubectl -n rook-ceph delete deployment --all
kubectl -n rook-ceph delete statefulsets --all
kubectl -n rook-ceph delete daemonsets --all
kubectl -n rook-ceph delete replicaset --all
kubectl -n rook-ceph delete service --all

# Verify
k get all -n rook-ceph
No resources found in rook-ceph namespace.

# Wipe the disks
sudo wipefs -a /dev/sdb
sudo wipefs -a /dev/sdc
sudo wipefs -a /dev/sdd

# Install the operator
cd ~/rook/deploy/charts/rook-ceph
helm install --create-namespace --namespace rook-ceph rook-ceph \
  . -f somaz-values.yaml

# Install the Ceph cluster
cd ~/rook/deploy/charts/rook-ceph-cluster
helm install --create-namespace --namespace rook-ceph rook-ceph-cluster \
  --set operatorNamespace=rook-ceph . -f somaz-values.yaml
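When a resource hangs in Terminating during the teardown, the finalizers can be cleared non-interactively with `kubectl patch` instead of opening an editor. This is only a sketch: the function name `remove_finalizers` is hypothetical, and skipping finalizers bypasses Rook's own cleanup, so it is only reasonable for a throwaway test cluster like this one.

```shell
# Hypothetical helper: clears metadata.finalizers on one resource in the rook-ceph namespace.
remove_finalizers() {
  kind=$1
  name=$2
  # A merge patch that replaces the finalizer list with an empty one.
  kubectl -n rook-ceph patch "$kind" "$name" \
    --type merge -p '{"metadata":{"finalizers":[]}}'
}

# Usage, for resources stuck in Terminating:
#   remove_finalizers cephfilesystem ceph-filesystem
#   remove_finalizers cephblockpool ceph-blockpool
```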

If something goes wrong, check the operator pod's logs.

k logs -n rook-ceph rook-ceph-operator-799cf9f45-wbxk5 | less

...

2024-02-05 06:32:19.993505 I | cephclient: writing config file /var/lib/rook/rook-ceph/rook-ceph.config

2024-02-05 06:32:19.993975 I | cephclient: generated admin config in /var/lib/rook/rook-ceph

2024-02-05 06:32:20.143283 I | op-mon: 0 of 1 expected mons are ready. creating or updating deployments without checking quorum in attempt to achieve a healthy mon cluster

2024-02-05 06:32:20.536237 I | op-mon: updating maxMonID from -1 to 0

2024-02-05 06:32:21.337162 I | op-mon: saved mon endpoints to config map map[csi-cluster-config-json:[{"clusterID":"rook-ceph","monitors":["10.233.42.134:6789"],"cephFS":{"netNamespaceFilePath":"","subvolumeGroup":"","kernelMountOptions":"","fuseMountOptions":""},"rbd":{"netNamespaceFilePath":"","radosNamespace":""},"nfs":{"netNamespaceFilePath":""},"readAffinity":{"enabled":false,"crushLocationLabels":null},"namespace":""}] data:a=10.233.42.134:6789 mapping:{"node":{"a":{"Name":"test-server-storage","Hostname":"test-server-storage","Address":"10.77.101.9"}}} maxMonId:0 outOfQuorum:]

2024-02-05 06:32:21.337386 I | op-mon: waiting for mon quorum with [a]

2024-02-05 06:32:21.541335 I | op-mon: mon a is not yet running

2024-02-05 06:32:21.541354 I | op-mon: mons running: []

2024-02-05 06:32:41.636556 I | op-mon: mons running: [a]

2024-02-05 06:32:50.900431 I | op-mon: mons running: [a]
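The operator log is long, so it can help to look at problem lines only. Below is a small sketch under two assumptions: the level letter is the third whitespace-separated field, as in the excerpt above, and `W` and `E` mark warnings and errors. The function name `rook_log_problems` is hypothetical.

```shell
# Hypothetical filter: keeps only lines whose level field is W or E.
rook_log_problems() {
  # Field layout assumed from the excerpt: <date> <time> <level> | <component>: <message>
  awk '$4 == "|" && ($3 == "W" || $3 == "E")'
}

# Usage (deploy/rook-ceph-operator avoids copying the generated pod name):
#   kubectl -n rook-ceph logs deploy/rook-ceph-operator | rook_log_problems
```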

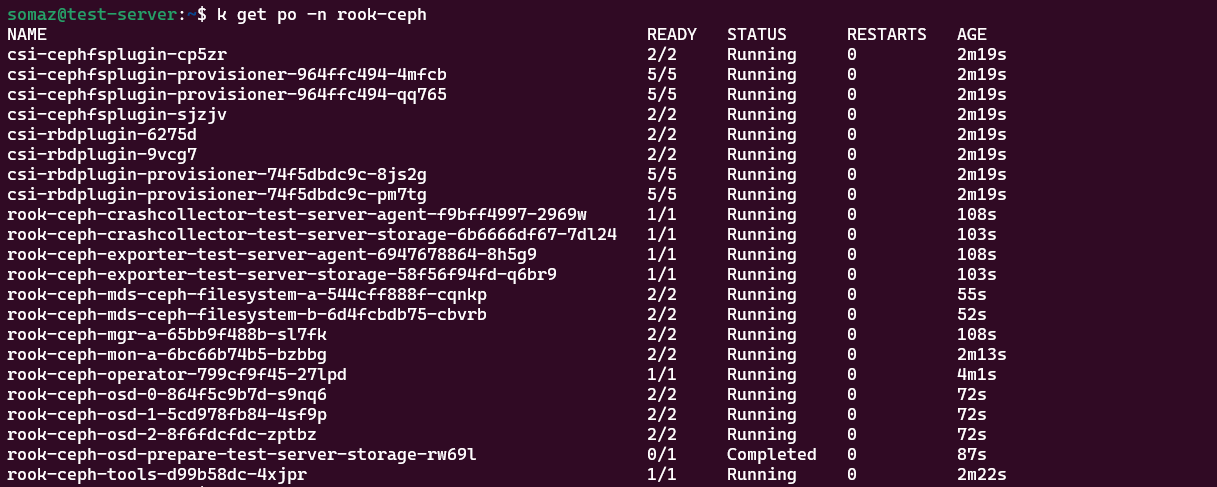

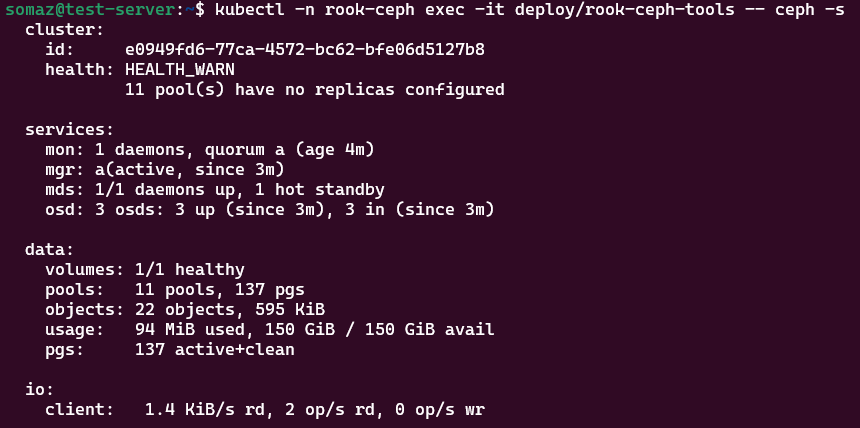

Verify the installation. Because the Ceph replica size was set to 1, the `pool(s) have no replicas configured` warning is expected. The installation takes at least 10 minutes.

# Check the pods

k get po -n rook-ceph

NAME READY STATUS RESTARTS AGE

csi-cephfsplugin-7ldgx 2/2 Running 1 (2m39s ago) 3m26s

csi-cephfsplugin-ffmgn 2/2 Running 0 3m26s

csi-cephfsplugin-provisioner-67b5c7f475-5s659 5/5 Running 1 (2m32s ago) 3m26s

csi-cephfsplugin-provisioner-67b5c7f475-lrkpm 5/5 Running 0 3m26s

csi-rbdplugin-49jd4 2/2 Running 0 3m26s

csi-rbdplugin-9wbd7 2/2 Running 1 (2m39s ago) 3m26s

csi-rbdplugin-provisioner-657d6bc4c4-7hvw8 5/5 Running 0 3m26s

csi-rbdplugin-provisioner-657d6bc4c4-xjrjd 5/5 Running 1 (2m35s ago) 3m26s

rook-ceph-crashcollector-test-server-agent-8f59d6d99-88c49 1/1 Running 0 45s

rook-ceph-crashcollector-test-server-storage-7bc6776db5-nmwb9 1/1 Running 0 62s

rook-ceph-exporter-test-server-agent-7d5c4857dc-hb4rp 1/1 Running 0 45s

rook-ceph-exporter-test-server-storage-5f7d8858d5-hkd6w 1/1 Running 0 59s

rook-ceph-mds-ceph-filesystem-a-788f7fd4d9-gwsz6 2/2 Running 0 45s

rook-ceph-mds-ceph-filesystem-b-57f9cc8b9f-lvnf7 0/2 Pending 0 44s

rook-ceph-mgr-a-5b6c466464-22zjc 2/2 Running 0 100s

rook-ceph-mon-a-6d9d57f59d-6gk54 2/2 Running 0 2m37s

rook-ceph-operator-56d466d645-dxbhb 1/1 Running 0 10m

rook-ceph-osd-0-6444dd8b86-thzqn 2/2 Running 0 62s

rook-ceph-osd-1-7db56668c7-zh5z6 2/2 Running 0 62s

rook-ceph-osd-2-85c78fb94-pjgvz 2/2 Running 0 62s

rook-ceph-osd-prepare-test-server-storage-qhsrv 0/1 Completed 0 79s

rook-ceph-tools-5574756dc7-hw2sf 1/1 Running 0 3m51s

# Check the StorageClass

k get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

ceph-block (default) rook-ceph.rbd.csi.ceph.com Delete Immediate true 3m13s

# Check the Ceph version

k -n rook-ceph exec -it deploy/rook-ceph-tools -- ceph version

ceph version 18.2.1 (7fe91d5d5842e04be3b4f514d6dd990c54b29c76) reef (stable)

# Check the Ceph status

k -n rook-ceph exec -it deploy/rook-ceph-tools -- ceph -s

cluster:

id: e0949fd6-77ca-4572-bc62-bfe06d5127b8

health: HEALTH_WARN

11 pool(s) have no replicas configured

services:

mon: 1 daemons, quorum a (age 4m)

mgr: a(active, since 3m)

osd: 3 osds: 3 up (since 3m), 3 in (since 3m)

data:

volumes: 1/1 healthy

pools: 11 pools, 137 pgs

objects: 22 objects, 595 KiB

usage: 94 MiB used, 150 GiB / 150 GiB avail

pgs: 137 active+clean

io:

client: 1.4 KiB/s rd, 2 op/s rd, 0 op/s wr

Next, some useful ceph commands. In this single-replica test setup, the `HEALTH_WARN 11 pool(s) have no replicas configured` message can be ignored.

# Check health

k -n rook-ceph exec -it deploy/rook-ceph-tools -- ceph health detail

HEALTH_WARN 11 pool(s) have no replicas configured

[WRN] POOL_NO_REDUNDANCY: 11 pool(s) have no replicas configured

pool 'ceph-blockpool' has no replicas configured

pool '.mgr' has no replicas configured

pool 'ceph-objectstore.rgw.control' has no replicas configured

pool 'ceph-filesystem-metadata' has no replicas configured

pool 'ceph-filesystem-data0' has no replicas configured

pool 'ceph-objectstore.rgw.meta' has no replicas configured

pool 'ceph-objectstore.rgw.log' has no replicas configured

pool 'ceph-objectstore.rgw.buckets.index' has no replicas configured

pool 'ceph-objectstore.rgw.buckets.non-ec' has no replicas configured

pool 'ceph-objectstore.rgw.otp' has no replicas configured

pool '.rgw.root' has no replicas configured
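Ignoring one known warning is easier when anything else still stands out. As a sketch, the health detail output can be filtered so that only check codes other than `POOL_NO_REDUNDANCY` are printed. The function name `unexpected_health_checks` is hypothetical, and the parsing assumes the `[WRN] CODE: message` layout shown above.

```shell
# Hypothetical filter: reads `ceph health detail` output on stdin and prints every
# health check code except POOL_NO_REDUNDANCY, which is expected with size 1 pools.
unexpected_health_checks() {
  awk '/^\[(WRN|ERR)\] / {
    code = $2
    sub(/:$/, "", code)                       # "POOL_NO_REDUNDANCY:" -> "POOL_NO_REDUNDANCY"
    if (code != "POOL_NO_REDUNDANCY") print code
  }'
}

# Usage (no -it, since the output is piped):
#   kubectl -n rook-ceph exec deploy/rook-ceph-tools -- ceph health detail | unexpected_health_checks
```

Empty output means the cluster reports nothing beyond the expected single-replica warning.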

# Check PG status (healthy)

k -n rook-ceph exec -it deploy/rook-ceph-tools -- ceph pg dump_stuck

ok

# Check PG status (when there is a problem)

kubectl -n rook-ceph exec -it deploy/rook-ceph-tools -- ceph pg dump_stuck

PG_STAT STATE UP UP_PRIMARY ACTING ACTING_PRIMARY

12.0 undersized+peered [0] 0 [0] 0

11.0 creating+incomplete [1,2147483647,2147483647] 1 [1,2147483647,2147483647] 1

ok
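In the `creating+incomplete` row, `2147483647` is the value Ceph prints for a slot where CRUSH found no OSD. PG `11.0` belongs to pool 11, which the `ceph osd lspools` output in this post lists as `ceph-objectstore.rgw.buckets.data`. One likely reading, offered as an assumption rather than a confirmed diagnosis, is that the erasure-coded data pool (`dataChunks: 2`, `codingChunks: 1`, `failureDomain: host`) needs three hosts while only one storage host exists. The arithmetic, with hypothetical helper names:

```shell
# An erasure-coded pool stores k data chunks + m coding chunks, each in its own failure domain.
ec_required_domains() {
  echo $(( $1 + $2 ))
}

# Succeeds (exit 0) only if the available failure domains can hold every chunk.
ec_placeable() {
  k=$1; m=$2; domains=$3
  [ "$domains" -ge "$(ec_required_domains "$k" "$m")" ]
}

ec_required_domains 2 1            # prints 3
if ec_placeable 2 1 1; then        # one storage host, as in this cluster
  echo "placeable"
else
  echo "not placeable: need $(ec_required_domains 2 1) hosts, have 1"
fi
```

On a single-host test cluster, setting the pool's `failureDomain` to `osd` or using a replicated data pool would avoid the requirement, at the cost of any host-level redundancy.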

# Check OSD status

k -n rook-ceph exec -it deploy/rook-ceph-tools -- ceph osd status

ID HOST USED AVAIL WR OPS WR DATA RD OPS RD DATA STATE

0 test-server-storage 34.6M 49.9G 0 0 0 0 exists,up

1 test-server-storage 38.6M 49.9G 0 0 2 106 exists,up

2 test-server-storage 34.6M 49.9G 0 0 0 0 exists,up

Check the rest of the cluster state.

# Check the OSD tree

k -n rook-ceph exec -it deploy/rook-ceph-tools -- ceph osd tree

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-1 0.14639 root default

-3 0.14639 host test-server-storage

0 ssd 0.04880 osd.0 up 1.00000 1.00000

1 ssd 0.04880 osd.1 up 1.00000 1.00000

2 ssd 0.04880 osd.2 up 1.00000 1.00000

# Check OSD status

k -n rook-ceph exec -it deploy/rook-ceph-tools -- ceph osd status

ID HOST USED AVAIL WR OPS WR DATA RD OPS RD DATA STATE

0 test-server-storage 29.7M 49.9G 0 0 0 0 exists,up

1 test-server-storage 29.8M 49.9G 0 0 2 106 exists,up

2 test-server-storage 38.3M 49.9G 0 0 0 0 exists,up

# List the OSD pools

k -n rook-ceph exec -it deploy/rook-ceph-tools -- ceph osd lspools

1 ceph-blockpool

2 ceph-objectstore.rgw.control

3 ceph-filesystem-metadata

4 ceph-filesystem-data0

5 ceph-objectstore.rgw.meta

6 ceph-objectstore.rgw.log

7 ceph-objectstore.rgw.buckets.index

8 ceph-objectstore.rgw.buckets.non-ec

9 ceph-objectstore.rgw.otp

10 .rgw.root

11 ceph-objectstore.rgw.buckets.data

12 .mgr

# Show the details of an OSD pool

k -n rook-ceph exec -it deploy/rook-ceph-tools -- ceph osd pool get {pool-name} all

# Check the resources. The object store is not used in this setup, so its Failure phase is expected.

k get cephblockpool -n rook-ceph

NAME PHASE

ceph-blockpool Ready

k get cephobjectstore -n rook-ceph

NAME PHASE

ceph-objectstore Failure

k get cephfilesystem -n rook-ceph

NAME ACTIVEMDS AGE PHASE

ceph-filesystem 1 65m Ready

k get cephfilesystemsubvolumegroups.ceph.rook.io -n rook-ceph

NAME PHASE

ceph-filesystem-csi Ready

Create a simple test pod.

cat <<EOF > test-pod.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ceph-rbd-pvc
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: ceph-block
  resources:
    requests:
      storage: 1Gi
---
apiVersion: v1
kind: Pod
metadata:
  name: pod-using-ceph-rbd
spec:
  containers:
    - name: my-container
      image: nginx
      volumeMounts:
        - mountPath: "/var/lib/www/html"
          name: mypd
  volumes:
    - name: mypd
      persistentVolumeClaim:
        claimName: ceph-rbd-pvc
EOF

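After applying the manifest to the `test` namespace (for example with `k apply -f test-pod.yaml -n test`), check the result. The volume is bound and the pod is running.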

k get pv,po,pvc -n test

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS VOLUMEATTRIBUTESCLASS REASON AGE

persistentvolume/pvc-27e58696-061e-40f3-afb4-f7c992179ffe 1Gi RWO Delete Bound test/ceph-rbd-pvc ceph-block <unset> 25s

NAME READY STATUS RESTARTS AGE

pod/pod-using-ceph-rbd 1/1 Running 0 25s

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS VOLUMEATTRIBUTESCLASS AGE

persistentvolumeclaim/ceph-rbd-pvc Bound pvc-27e58696-061e-40f3-afb4-f7c992179ffe 1Gi RWO ceph-block <unset> 25s
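A `Bound` claim shows that provisioning worked, but not that the pod can actually write to the RBD volume. A small smoke test can check both. This is only a sketch: the function name `verify_rbd_volume` and the file name `probe.txt` are hypothetical, and it assumes the manifest was applied to the `test` namespace, as the output above suggests.

```shell
# Hypothetical smoke test: waits for the claim and the pod, then writes and reads a file on the volume.
verify_rbd_volume() {
  ns=${1:-test}
  kubectl -n "$ns" wait --for=jsonpath='{.status.phase}'=Bound pvc/ceph-rbd-pvc --timeout=120s &&
  kubectl -n "$ns" wait --for=condition=Ready pod/pod-using-ceph-rbd --timeout=120s &&
  kubectl -n "$ns" exec pod-using-ceph-rbd -- \
    sh -c 'echo hello > /var/lib/www/html/probe.txt && cat /var/lib/www/html/probe.txt'
}

# Usage:
#   verify_rbd_volume          # uses the "test" namespace
#   verify_rbd_volume default  # or pass another namespace
```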

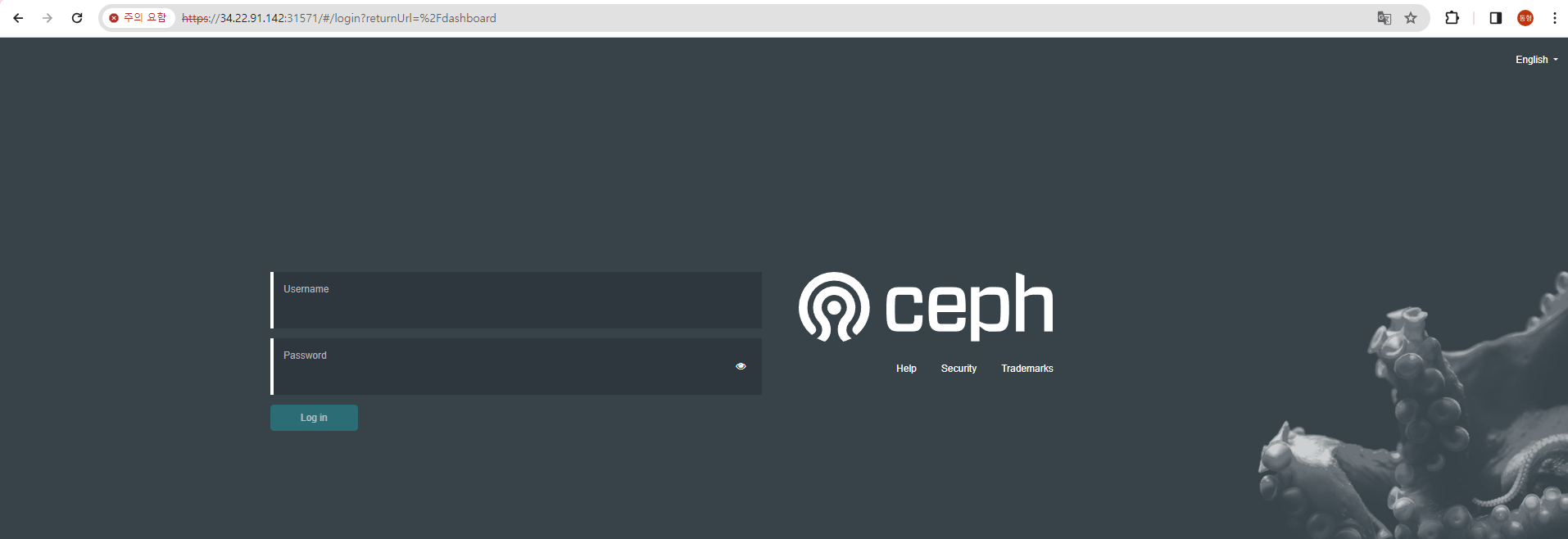

Next, try out the dashboard by exposing it through a NodePort Service.

cat <<EOF > rook-ceph-mgr-dashboard-external.yaml
apiVersion: v1
kind: Service
metadata:
  name: rook-ceph-mgr-dashboard-external-https
  namespace: rook-ceph
  labels:
    app: rook-ceph-mgr
    rook_cluster: rook-ceph
spec:
  ports:
    - name: dashboard
      port: 8443
      protocol: TCP
      targetPort: 8443
  selector:
    app: rook-ceph-mgr
    rook_cluster: rook-ceph
    mgr_role: active
  sessionAffinity: None
  type: NodePort
EOF

k apply -f rook-ceph-mgr-dashboard-external.yaml -n rook-ceph

k get svc -n rook-ceph

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

rook-ceph-exporter ClusterIP 10.233.55.70 <none> 9926/TCP 48m

rook-ceph-mgr ClusterIP 10.233.62.179 <none> 9283/TCP 47m

rook-ceph-mgr-dashboard ClusterIP 10.233.48.169 <none> 8443/TCP 47m

rook-ceph-mgr-dashboard-external-https NodePort 10.233.51.90 <none> 8443:31571/TCP 3s

rook-ceph-mon-a ClusterIP 10.233.7.147 <none> 6789/TCP,3300/TCP 48m

rook-ceph-rgw-ceph-objectstore ClusterIP 10.233.10.183 <none> 80/TCP 47m
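Kubernetes assigns the NodePort (31571 in this run), and that number is what the firewall has to allow, so it is worth reading it out rather than copying it by eye. A minimal sketch, with the hypothetical helper name `node_port_of`:

```shell
# Hypothetical helper: extracts the node port from a PORT(S) cell such as "8443:31571/TCP".
node_port_of() {
  ports=$1
  ports=${ports#*:}      # drop the service port and the colon -> "31571/TCP"
  echo "${ports%%/*}"    # drop the protocol suffix            -> "31571"
}

node_port_of "8443:31571/TCP"   # prints 31571

# Asking the API directly avoids parsing table output:
#   kubectl -n rook-ceph get svc rook-ceph-mgr-dashboard-external-https \
#     -o jsonpath='{.spec.ports[0].nodePort}'
```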

Allow the NodePort through the firewall.

## Firewall ##
resource "google_compute_firewall" "nfs_server_ssh" {
  name    = "allow-ssh-nfs-server"
  network = var.shared_vpc

  allow {
    protocol = "tcp"
    ports    = ["22", "31571"]
  }

  source_ranges = ["${var.public_ip}/32"]
  target_tags   = [var.kubernetes_server, var.kubernetes_client]

  depends_on = [module.vpc]
}

Look up the dashboard credentials. The default username is `admin`.

# Check the password

kubectl -n rook-ceph get secret rook-ceph-dashboard-password -o jsonpath="{['data']['password']}" | base64 --decode && echo

.BzmuOklD<D)~T_@7,pr

To reset the password, proceed as follows.

cat <<EOF > password.txt

somaz@2024

EOF

k get po -n rook-ceph |grep tools

rook-ceph-tools-d99b58dc-4xjpr 1/1 Running 0 55m

k -n rook-ceph cp password.txt rook-ceph-tools-d99b58dc-4xjpr:/tmp/password.txt

k -n rook-ceph exec -it deploy/rook-ceph-tools -- ceph dashboard set-login-credentials admin -i /tmp/password.txt

******************************************************************

*** WARNING: this command is deprecated. ***

*** Please use the ac-user-* related commands to manage users. ***

******************************************************************

Username and password updated
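The deprecation warning points to the `ac-user-*` commands. A sketch of the same reset using `ceph dashboard ac-user-set-password` follows. The function name `reset_dashboard_password` is hypothetical, and the `app=rook-ceph-tools` pod label is an assumption based on the standard Rook toolbox manifest; it also assumes `password.txt` exists in the current directory.

```shell
# Hypothetical helper: resets a dashboard user's password with the non-deprecated command.
reset_dashboard_password() {
  user=${1:-admin}
  # Resolve the toolbox pod name instead of copying it by hand ("pod/<name>" -> "<name>").
  pod=$(kubectl -n rook-ceph get pod -l app=rook-ceph-tools -o name | head -n 1)
  kubectl -n rook-ceph cp password.txt "${pod#pod/}:/tmp/password.txt" &&
  kubectl -n rook-ceph exec deploy/rook-ceph-tools -- \
    ceph dashboard ac-user-set-password "$user" -i /tmp/password.txt
}

# Usage:
#   reset_dashboard_password         # resets the admin user
#   reset_dashboard_password somaz   # or another existing dashboard user
```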

Now open the dashboard.

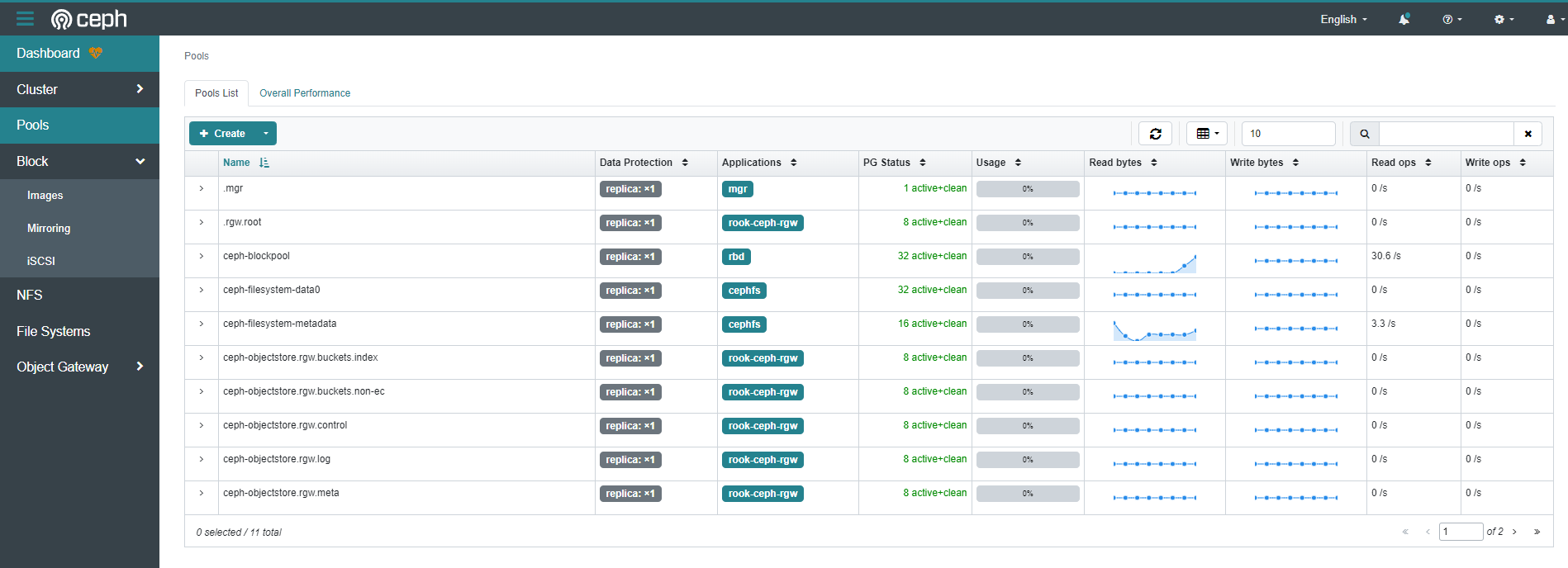

The pools can be inspected there as well.

Reference

https://cloud.google.com/compute/docs/general-purpose-machines?hl=ko#n2_machine_types

https://anthonyspiteri.net/rook-ceph-backed-object-storage-for-kubernetes-install-and-configure/

https://rook.io/docs/rook/latest-release/Getting-Started/storage-architecture/#architecture
