Overview: EKS 오토스케일링 완전 정복

CloudNet@ AEWS 스터디 5주차는 EKS Autoscaling 이다.

이번 CloudNet@ AEWS 스터디 5주차에서는 EKS 오토스케일링(Auto Scaling)을 주제로, Kubernetes 클러스터에서 다양한 오토스케일링 전략을 실습하고 이해하는 시간을 가졌다. 실습은 크게 다음과 같은 흐름으로 진행되었다.

- 실습 환경 배포 및 기본 설정

- EKS 클러스터를 CloudFormation으로 One-click 배포

- ExternalDNS, AWS Load Balancer Controller, kube-ops-view 등 환경 설정

- 모니터링 툴 설치

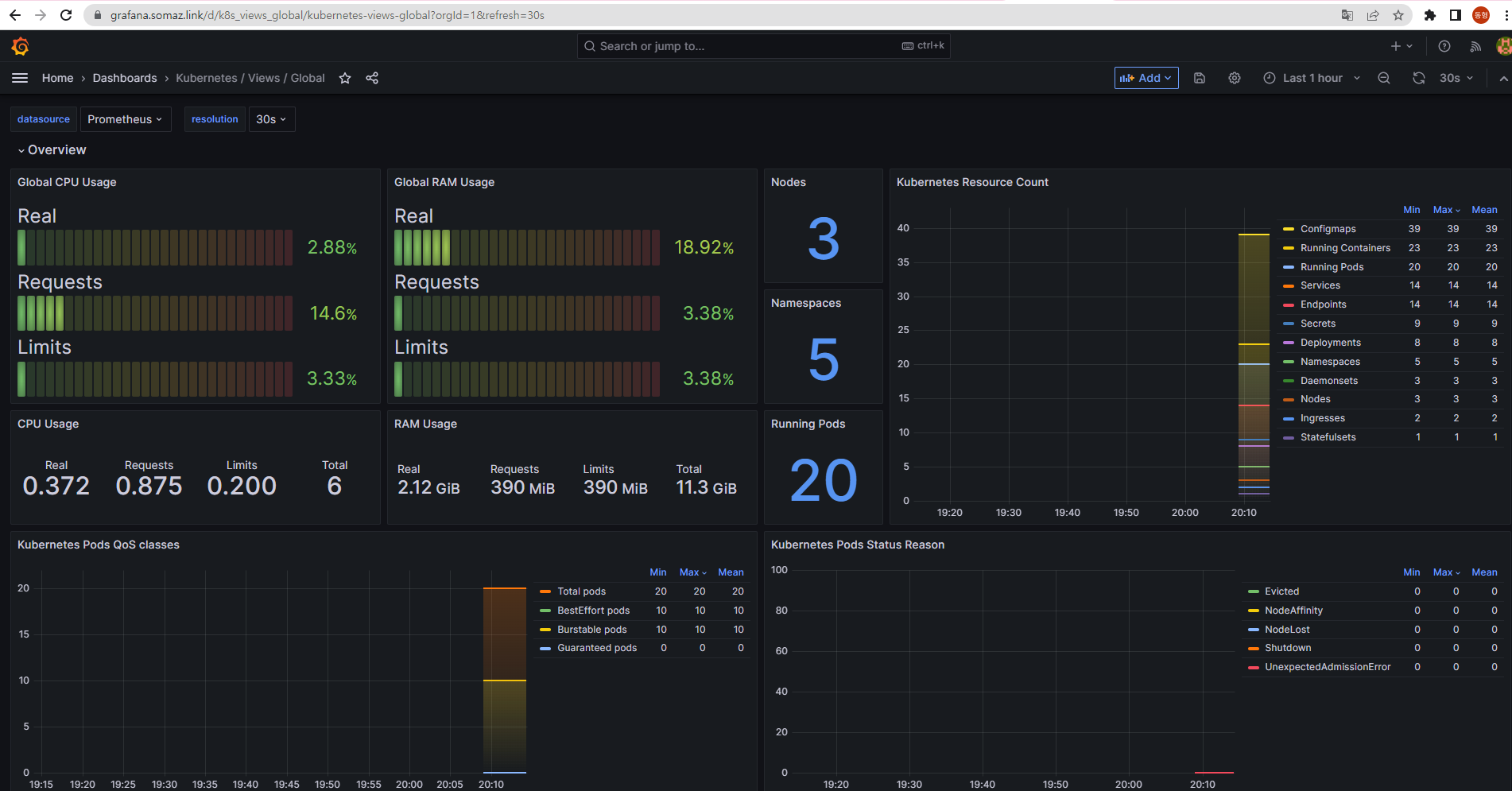

- Prometheus + Grafana로 클러스터 리소스와 오토스케일링 상태 시각화

- Metrics Server, eks-node-viewer 등도 함께 구성

- Autoscaler 종류별 실습

- HPA (Horizontal Pod Autoscaler)

: CPU 사용량 기반의 파드 수 조절 실습 - KEDA (Event Driven Autoscaler)

: 이벤트 기반 오토스케일링 (ex. cron, Kafka 등) 실습 - VPA (Vertical Pod Autoscaler)

: Pod 리소스 request 값을 동적으로 최적화 - CA (Cluster Autoscaler)

: Pending 파드를 기준으로 노드 수 자동 조정 - CPA (Cluster Proportional Autoscaler)

: 노드 수에 비례하여 특정 리소스를 자동 조절

- HPA (Horizontal Pod Autoscaler)

- Karpenter 실습

- “Node-less” 아키텍처를 구현하는 차세대 오토스케일러

- Spot/On-demand 인스턴스 자동 프로비저닝

- Consolidation(통합) 기능으로 리소스 최적화 및 비용 절감 실습

0. 실습 환경 배포

Amazon EKS 윈클릭 배포 (myeks) & 기본 설정

# YAML 파일 다운로드

curl -O https://s3.ap-northeast-2.amazonaws.com/cloudformation.cloudneta.net/K8S/eks-oneclick4.yaml

# CloudFormation 스택 배포

예시) aws cloudformation deploy --template-file eks-oneclick4.yaml --stack-name myeks --parameter-overrides KeyName=somaz-key SgIngressSshCidr=$(curl -s ipinfo.io/ip)/32 MyIamUserAccessKeyID=AKIA5... MyIamUserSecretAccessKey='CVNa2...' ClusterBaseName=myeks --region ap-northeast-2

# CloudFormation 스택 배포 완료 후 작업용 EC2 IP 출력

aws cloudformation describe-stacks --stack-name myeks --query 'Stacks[*].Outputs[0].OutputValue' --output text

# 작업용 EC2 SSH 접속

ssh -i ~/.ssh/somaz-key.pem ec2-user@$(aws cloudformation describe-stacks --stack-name myeks --query 'Stacks[*].Outputs[0].OutputValue' --output text)

기본 설정

# default 네임스페이스 적용

kubectl ns default

# (옵션) context 이름 변경

NICK=<각자 자신의 닉네임>

NICK=somaz

kubectl ctx

somaz@myeks.ap-northeast-2.eksctl.io

kubectl config rename-context admin@myeks.ap-northeast-2.eksctl.io $NICK@myeks

# ExternalDNS

MyDomain=<자신의 도메인>

echo "export MyDomain=<자신의 도메인>" >> /etc/profile

MyDomain=somaz.link

echo "export MyDomain=somaz.link" >> /etc/profile

MyDnzHostedZoneId=$(aws route53 list-hosted-zones-by-name --dns-name "${MyDomain}." --query "HostedZones[0].Id" --output text)

echo $MyDomain, $MyDnzHostedZoneId

somaz.link, /hostedzone/Z03204211VEUZG9O0RLE5

curl -s -O https://raw.githubusercontent.com/gasida/PKOS/main/aews/externaldns.yaml

MyDomain=$MyDomain MyDnzHostedZoneId=$MyDnzHostedZoneId envsubst < externaldns.yaml | kubectl apply -f -

# kube-ops-view

helm repo add geek-cookbook https://geek-cookbook.github.io/charts/

helm install kube-ops-view geek-cookbook/kube-ops-view --version 1.2.2 --set env.TZ="Asia/Seoul" --namespace kube-system

kubectl patch svc -n kube-system kube-ops-view -p '{"spec":{"type":"LoadBalancer"}}'

kubectl annotate service kube-ops-view -n kube-system "external-dns.alpha.kubernetes.io/hostname=kubeopsview.$MyDomain"

echo -e "Kube Ops View URL = http://kubeopsview.$MyDomain:8080/#scale=1.5"

Kube Ops View URL = http://kubeopsview.somaz.link:8080/#scale=1.5

# AWS LB Controller

helm repo add eks https://aws.github.io/eks-charts

helm repo update

helm install aws-load-balancer-controller eks/aws-load-balancer-controller -n kube-system --set clusterName=$CLUSTER_NAME \

--set serviceAccount.create=false --set serviceAccount.name=aws-load-balancer-controller

# 노드 보안그룹 ID 확인

NGSGID=$(aws ec2 describe-security-groups --filters Name=group-name,Values='*ng1*' --query "SecurityGroups[*].[GroupId]" --output text)

aws ec2 authorize-security-group-ingress --group-id $NGSGID --protocol '-1' --cidr 192.168.1.100/32

프로메테우스 & 그라파나(admin / prom-operator) 설치

- 대시보드 추천 15757 17900 15172

# 사용 리전의 인증서 ARN 확인

CERT_ARN=`aws acm list-certificates --query 'CertificateSummaryList[].CertificateArn[]' --output text`

echo $CERT_ARN

arn:aws:acm:ap-northeast-2:61184xxxxxxx:certificate/75exxxxx-5xxx-4xxx-8xxx-ab94xxxxxxx

# repo 추가

helm repo add prometheus-community https://prometheus-community.github.io/helm-charts

# 파라미터 파일 생성

cat <<EOT > monitor-values.yaml

prometheus:

prometheusSpec:

podMonitorSelectorNilUsesHelmValues: false

serviceMonitorSelectorNilUsesHelmValues: false

retention: 5d

retentionSize: "10GiB"

verticalPodAutoscaler:

enabled: true

ingress:

enabled: true

ingressClassName: alb

hosts:

- prometheus.$MyDomain

paths:

- /*

annotations:

alb.ingress.kubernetes.io/scheme: internet-facing

alb.ingress.kubernetes.io/target-type: ip

alb.ingress.kubernetes.io/listen-ports: '[{"HTTPS":443}, {"HTTP":80}]'

alb.ingress.kubernetes.io/certificate-arn: $CERT_ARN

alb.ingress.kubernetes.io/success-codes: 200-399

alb.ingress.kubernetes.io/load-balancer-name: myeks-ingress-alb

alb.ingress.kubernetes.io/group.name: study

alb.ingress.kubernetes.io/ssl-redirect: '443'

grafana:

defaultDashboardsTimezone: Asia/Seoul

adminPassword: prom-operator

ingress:

enabled: true

ingressClassName: alb

hosts:

- grafana.$MyDomain

paths:

- /*

annotations:

alb.ingress.kubernetes.io/scheme: internet-facing

alb.ingress.kubernetes.io/target-type: ip

alb.ingress.kubernetes.io/listen-ports: '[{"HTTPS":443}, {"HTTP":80}]'

alb.ingress.kubernetes.io/certificate-arn: $CERT_ARN

alb.ingress.kubernetes.io/success-codes: 200-399

alb.ingress.kubernetes.io/load-balancer-name: myeks-ingress-alb

alb.ingress.kubernetes.io/group.name: study

alb.ingress.kubernetes.io/ssl-redirect: '443'

defaultRules:

create: false

kubeControllerManager:

enabled: false

kubeEtcd:

enabled: false

kubeScheduler:

enabled: false

alertmanager:

enabled: false

EOT

# 배포

kubectl create ns monitoring

helm install kube-prometheus-stack prometheus-community/kube-prometheus-stack --version 45.27.2 \

--set prometheus.prometheusSpec.scrapeInterval='15s' --set prometheus.prometheusSpec.evaluationInterval='15s' \

-f monitor-values.yaml --namespace monitoring

(somaz@myeks:default) [root@myeks-bastion-EC2 ~]# k get po,svc,ingress -n monitoring

NAME READY STATUS RESTARTS AGE

pod/kube-prometheus-stack-grafana-68dfc58d45-4m2wq 3/3 Running 0 74s

pod/kube-prometheus-stack-kube-state-metrics-5d6578867c-bstv4 1/1 Running 0 74s

pod/kube-prometheus-stack-operator-74d474b47b-lhmqr 1/1 Running 0 74s

pod/kube-prometheus-stack-prometheus-node-exporter-nk4fb 1/1 Running 0 74s

pod/kube-prometheus-stack-prometheus-node-exporter-qhxrk 1/1 Running 0 74s

pod/kube-prometheus-stack-prometheus-node-exporter-swzpm 1/1 Running 0 74s

pod/prometheus-kube-prometheus-stack-prometheus-0 2/2 Running 0 68s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kube-prometheus-stack-grafana ClusterIP 10.100.191.31 <none> 80/TCP 74s

service/kube-prometheus-stack-kube-state-metrics ClusterIP 10.100.13.93 <none> 8080/TCP 74s

service/kube-prometheus-stack-operator ClusterIP 10.100.69.80 <none> 443/TCP 74s

service/kube-prometheus-stack-prometheus ClusterIP 10.100.170.54 <none> 9090/TCP 74s

service/kube-prometheus-stack-prometheus-node-exporter ClusterIP 10.100.212.210 <none> 9100/TCP 74s

service/prometheus-operated ClusterIP None <none> 9090/TCP 68s

NAME CLASS HOSTS

ADDRESS PORTS AGE

ingress.networking.k8s.io/kube-prometheus-stack-grafana alb grafana.somaz.link myeks-ingress-alb-1899028693.ap-northeast-2.elb.amazonaws.com 80 74s

ingress.networking.k8s.io/kube-prometheus-stack-prometheus alb prometheus.somaz.link myeks-ingress-alb-1899028693.ap-northeast-2.elb.amazonaws.com 80 74s

# Metrics-server 배포

kubectl apply -f https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml

EKS Node Viewer 설치

노드 할당 가능 용량과 요청 request 리소스 표시, 실제 파드 리소스 사용량 X

# go 설치

yum install -y go

# EKS Node Viewer 설치 : 현재 ec2 spec에서는 설치에 다소 시간이 소요됨 = 2분 이상

go install github.com/awslabs/eks-node-viewer/cmd/eks-node-viewer@latest

# bin 확인 및 사용

tree ~/go/bin

/root/go/bin

└── eks-node-viewer

0 directories, 1 file

cd ~/go/bin

./eks-node-viewer

3 nodes (875m/5790m) 15.1% cpu ██████░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░ $0.156/hour | $113.820 pods (0 pending 20 running 20 bound)

ip-192-168-2-71.ap-northeast-2.compute.internal cpu ██░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░░ 6

ip-192-168-1-213.ap-northeast-2.compute.internal cpu ██████░░░░░░░░░░░░░░░░░░░░░░░░░░░░░ 17

ip-192-168-3-158.ap-northeast-2.compute.internal cpu ████████░░░░░░░░░░░░░░░░░░░░░░░░░░░ 22

Press any key to quit

명령 샘플

# Standard usage

./eks-node-viewer

# Display both CPU and Memory Usage

./eks-node-viewer --resources cpu,memory

# Karenter nodes only

./eks-node-viewer --node-selector "karpenter.sh/provisioner-name"

# Display extra labels, i.e. AZ

./eks-node-viewer --extra-labels topology.kubernetes.io/zone

# Specify a particular AWS profile and region

AWS_PROFILE=myprofile AWS_REGION=us-west-2

기본 옵션

# select only Karpenter managed nodes

node-selector=karpenter.sh/provisioner-name

# display both CPU and memory

resources=cpu,memory

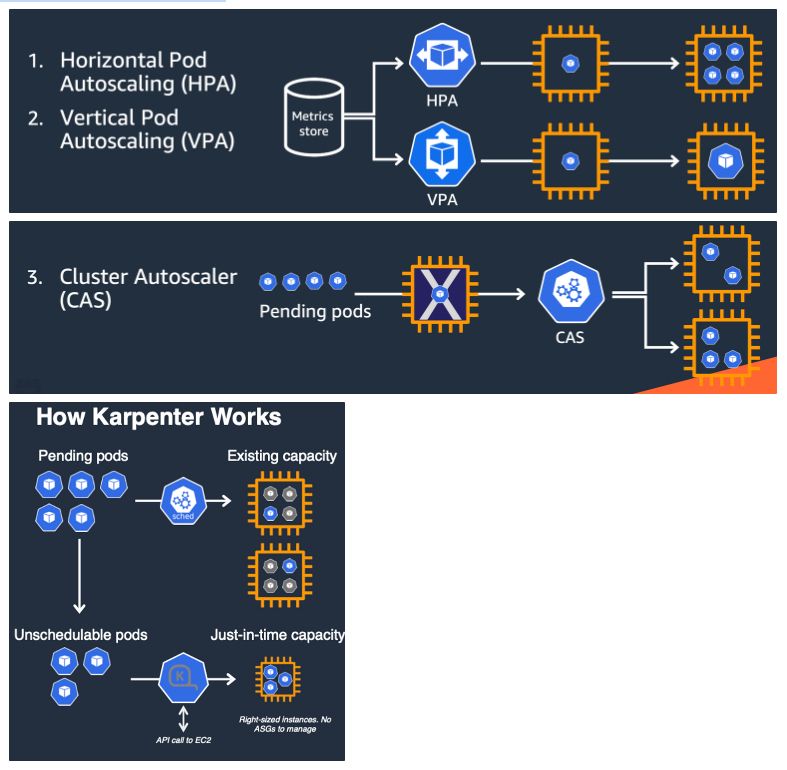

Kubernetes autoscaling overview

Auto Scaling 소개

- 출처 - (🧝🏻♂️)김태민 기술 블로그 - 링크

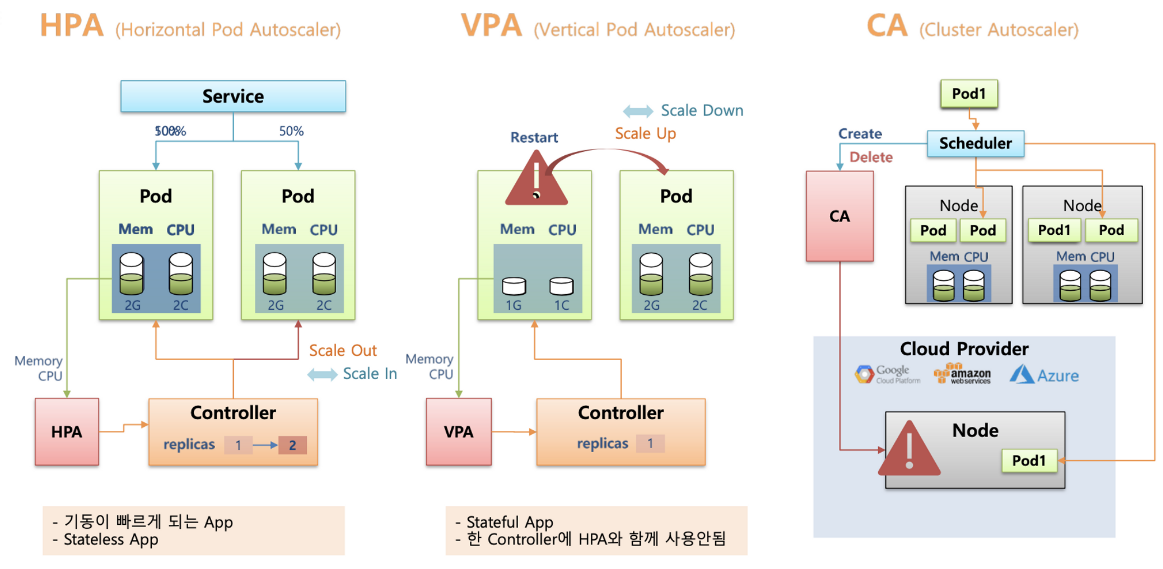

- K8S 오토스케일링 3가지 : HPA(Scale In/Out), VPA(Scale Up/Down), CA(노드 레벨)

HPA vs VPA vs CA

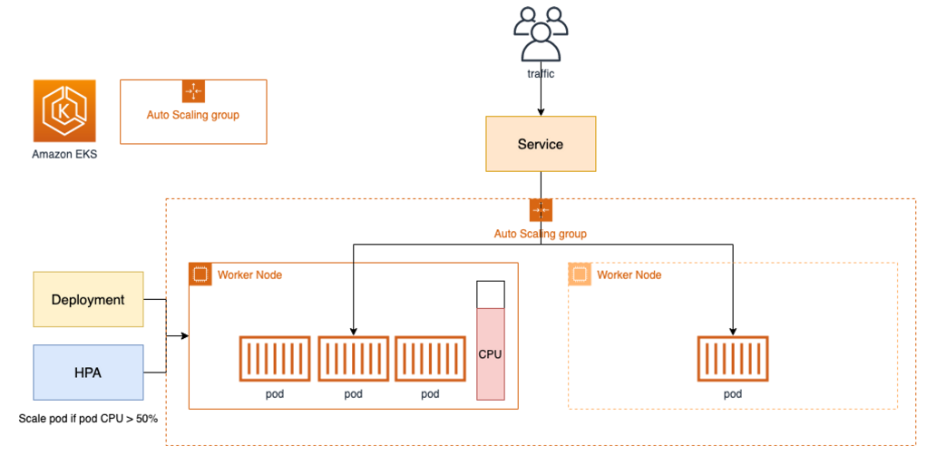

HPA 아키텍처

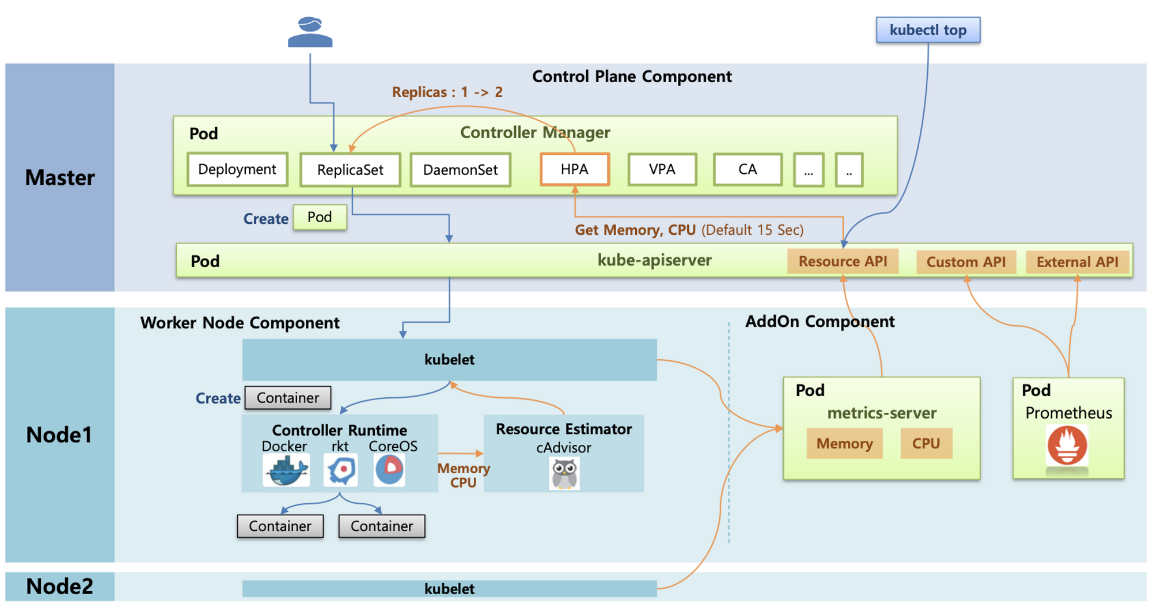

cAdvisor 이 컨테이너의 메모리/CPU 수집 → metrics-server 는 kubelet 를 통해서 수집 후 apiserver 에 등록

→ HPA는 apiserver(Resource API)를 통해서 15분 마다 메모리/CPU 수집하여 정책에 따라 동작

김태민님 기술 블로그

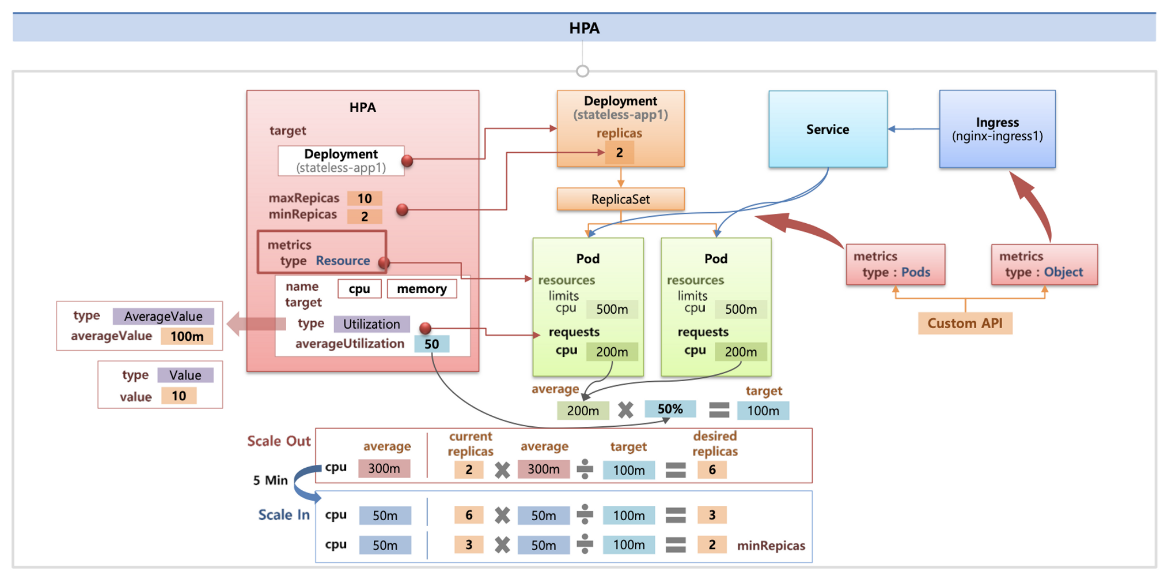

metrics.type(Resource, Pods, Object), target.type(Utilization, AverageValue, Value)

1. HPA - Horizontal Pod Autoscaler

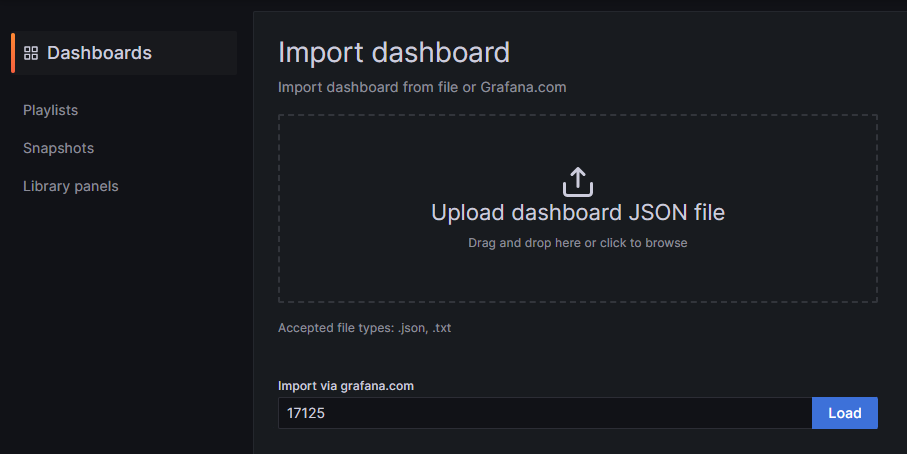

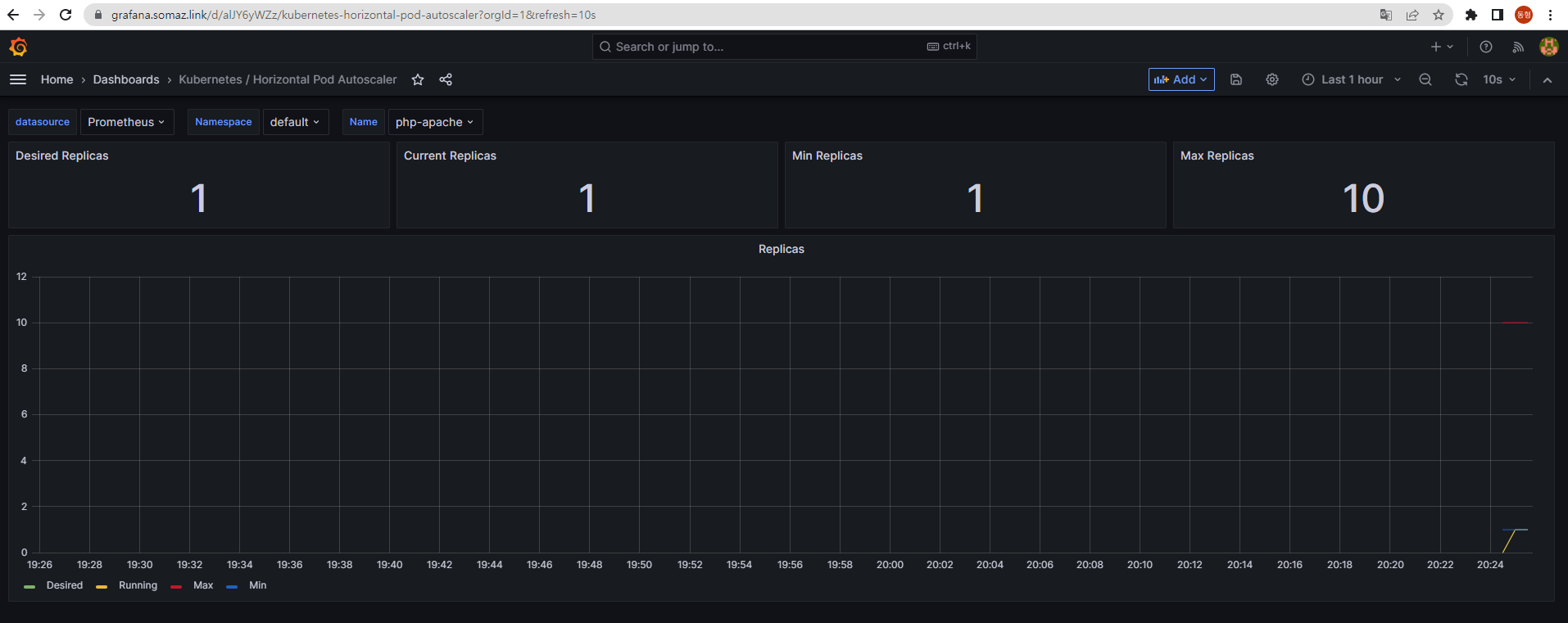

실습 : kube-ops-view 와 그라파나(17125)에서 모니터링 같이 해본다.

# Run and expose php-apache server

curl -s -O https://raw.githubusercontent.com/kubernetes/website/main/content/en/examples/application/php-apache.yaml

cat php-apache.yaml | yh

kubectl apply -f php-apache.yaml

# 확인

kubectl exec -it deploy/php-apache -- cat /var/www/html/index.php

...

<?php

$x = 0.0001;

for ($i = 0; $i <= 1000000; $i++) {

$x += sqrt($x);

}

echo "OK!";

?>

# 모니터링 : 터미널2개 사용

watch -d 'kubectl get hpa,pod;echo;kubectl top pod;echo;kubectl top node'

kubectl exec -it deploy/php-apache -- top

# 접속

PODIP=$(kubectl get pod -l run=php-apache -o jsonpath={.items[0].status.podIP})

curl -s $PODIP; echo

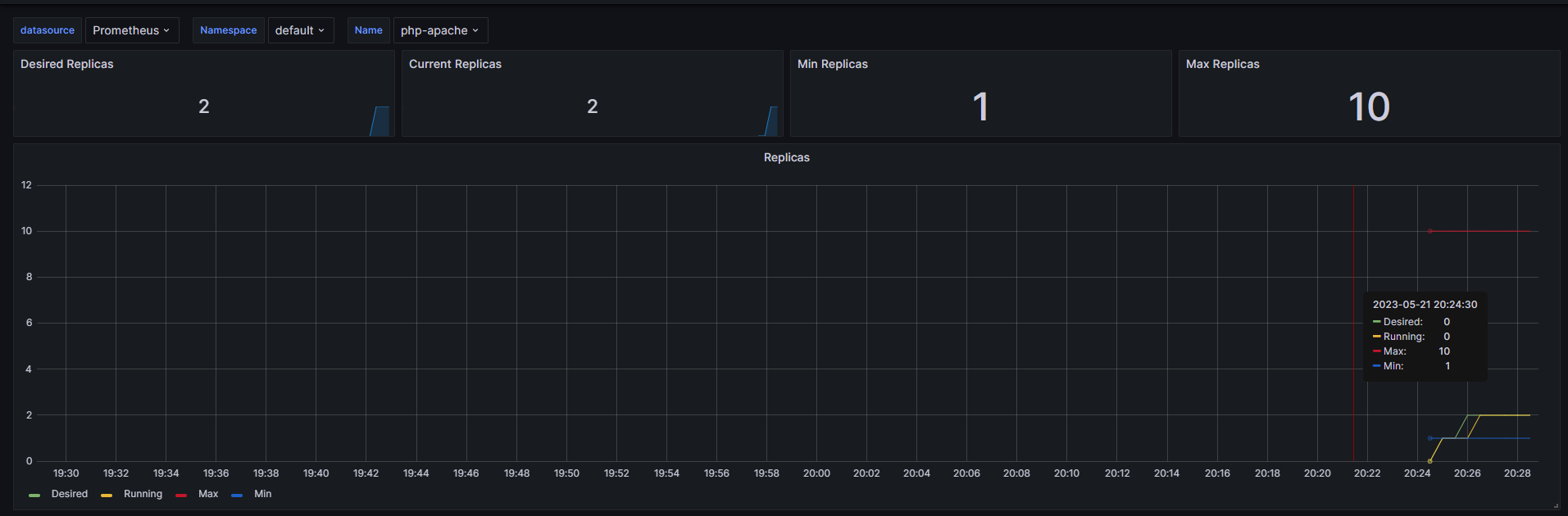

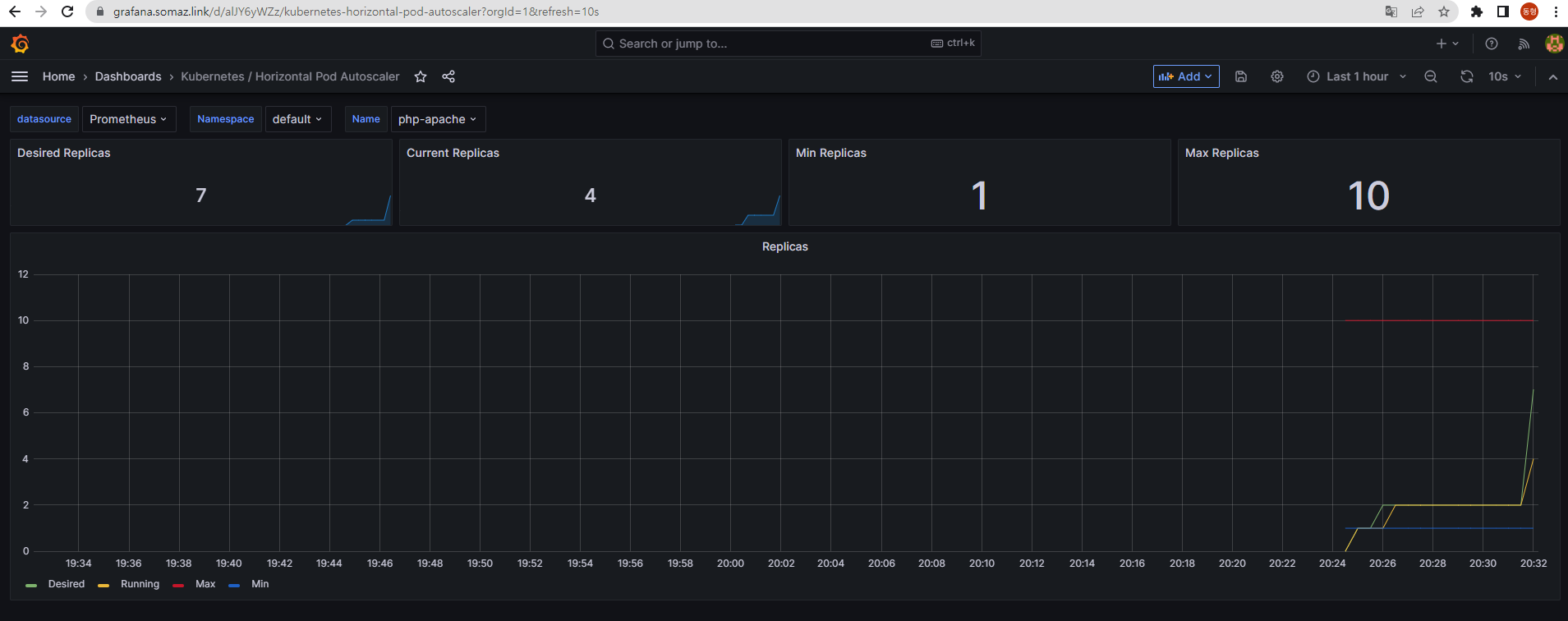

HPA 생성 및 부하 발생 후 오토 스케일링 테스트

증가 시 기본 대기 시간(30초), 감소 시 기본 대기 시간(5분) → 조정 가능

# Create the HorizontalPodAutoscaler : requests.cpu=200m - 알고리즘

# Since each pod requests 200 milli-cores by kubectl run, this means an average CPU usage of 100 milli-cores.

kubectl autoscale deployment php-apache --cpu-percent=50 --min=1 --max=10

kubectl describe hpa

...

Name: php-apache

Namespace: default

Labels: <none>

Annotations: <none>

CreationTimestamp: Sun, 21 May 2023 20:24:17 +0900

Reference: Deployment/php-apache

Metrics: ( current / target )

resource cpu on pods (as a percentage of request): <unknown> / 50%

Min replicas: 1

Max replicas: 10

Deployment pods: 0 current / 0 desired

Events: <none>

...

# HPA 설정 확인

kubectl krew install neat

kubectl get hpa php-apache -o yaml

kubectl get hpa php-apache -o yaml | kubectl neat | yh

spec:

minReplicas: 1 # [4] 또는 최소 1개까지 줄어들 수도 있습니다

maxReplicas: 10 # [3] 포드를 최대 5개까지 늘립니다

scaleTargetRef:

apiVersion: apps/v1

kind: Deployment

name: php-apache # [1] php-apache 의 자원 사용량에서

metrics:

- type: Resource

resource:

name: cpu

target:

type: Utilization

averageUtilization: 50 # [2] CPU 활용률이 50% 이상인 경우

# 반복 접속 1 (파드1 IP로 접속) >> 증가 확인 후 중지

while true;do curl -s $PODIP; sleep 0.5; done

OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!....

k get po

NAME READY STATUS RESTARTS AGE

php-apache-698db99f59-mrxtk 1/1 Running 0 3m39s

php-apache-698db99f59-mwstd 1/1 Running 0 10m

# 반복 접속 2 (서비스명 도메인으로 접속) >> 증가 확인(몇개까지 증가되는가? 그 이유는?) 후 중지 >> 중지 5분 후 파드 갯수 감소 확인

# Run this in a separate terminal

# so that the load generation continues and you can carry on with the rest of the steps

kubectl run -i --tty load-generator --rm --image=busybox:1.28 --restart=Never -- /bin/sh -c "while sleep 0.01; do wget -q -O- http://php-apache; done"

OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK!OK...

오브젝트 삭제

kubectl delete deploy,svc,hpa,pod --all

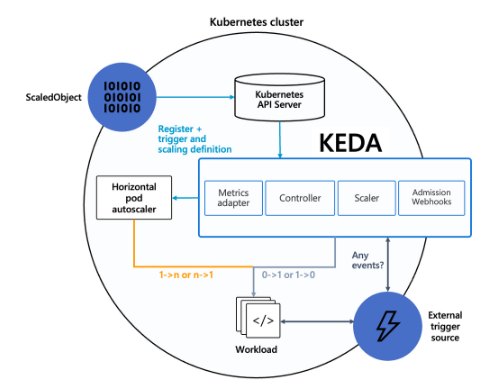

2. KEDA - Kubernetes based Event Driven Autoscaler

KEDA AutoScaler 소개

기존의 HPA(Horizontal Pod Autoscaler)는 리소스(CPU, Memory) 메트릭을 기반으로 스케일 여부를 결정하게 된다.

반면에 KEDA는 특정 이벤트를 기반으로 스케일 여부를 결정할 수 있다.

예를 들어 airflow는 metadb를 통해 현재 실행 중이거나 대기 중인 task가 얼마나 존재하는지 알 수 있다.

이러한 이벤트를 활용하여 worker의 scale을 결정한다면 queue에 task가 많이 추가되는 시점에 더 빠르게 확장할 수 있다.

KEDA Scalers : kafka trigger for an Apache Kafka topic

triggers:

- type: kafka

metadata:

bootstrapServers: kafka.svc:9092 # Comma separated list of Kafka brokers “hostname:port” to connect to for bootstrap.

consumerGroup: my-group # Name of the consumer group used for checking the offset on the topic and processing the related lag.

topic: test-topic # Name of the topic on which processing the offset lag. (Optional, see note below)

lagThreshold: '5' # Average target value to trigger scaling actions. (Default: 5, Optional)

offsetResetPolicy: latest # The offset reset policy for the consumer. (Values: latest, earliest, Default: latest, Optional)

allowIdleConsumers: false # When set to true, the number of replicas can exceed the number of partitions on a topic, allowing for idle consumers. (Default: false, Optional)

scaleToZeroOnInvalidOffset: false

version: 1.0.0 # Version of your Kafka brokers. See samara version (Default: 1.0.0, Optional)

KEDA with Helm : 특정 이벤트(cron 등)기반의 파드 오토 스케일링

# KEDA 설치

cat <<EOT > keda-values.yaml

metricsServer:

useHostNetwork: true

prometheus:

metricServer:

enabled: true

port: 9022

portName: metrics

path: /metrics

serviceMonitor:

# Enables ServiceMonitor creation for the Prometheus Operator

enabled: true

podMonitor:

# Enables PodMonitor creation for the Prometheus Operator

enabled: true

operator:

enabled: true

port: 8080

serviceMonitor:

# Enables ServiceMonitor creation for the Prometheus Operator

enabled: true

podMonitor:

# Enables PodMonitor creation for the Prometheus Operator

enabled: true

webhooks:

enabled: true

port: 8080

serviceMonitor:

# Enables ServiceMonitor creation for the Prometheus webhooks

enabled: true

EOT

kubectl create namespace keda

helm repo add kedacore https://kedacore.github.io/charts

helm install keda kedacore/keda --version 2.10.2 --namespace keda -f keda-values.yaml

# KEDA 설치 확인

kubectl get-all -n keda

...

NAME NAMESPACE AGE

configmap/kube-root-ca.crt keda 15s

endpoints/keda-admission-webhooks keda 11s

endpoints/keda-operator keda 11s

endpoints/keda-operator-metrics-apiserver keda 11s

pod/keda-admission-webhooks-68cf687cbf-cxpb4 keda 11s

pod/keda-operator-656478d687-nf5zs keda 11s

pod/keda-operator-metrics-apiserver-7fd585f657-k7m7f keda 11s

kubectl get crd | grep keda

clustertriggerauthentications.keda.sh 2023-05-21T11:38:04Z

scaledjobs.keda.sh 2023-05-21T11:38:04Z

scaledobjects.keda.sh 2023-05-21T11:38:04Z

triggerauthentications.keda.sh 2023-05-21T11:38:04Z

# keda 네임스페이스에 디플로이먼트 생성

kubectl apply -f php-apache.yaml -n keda

kubectl get pod -n keda

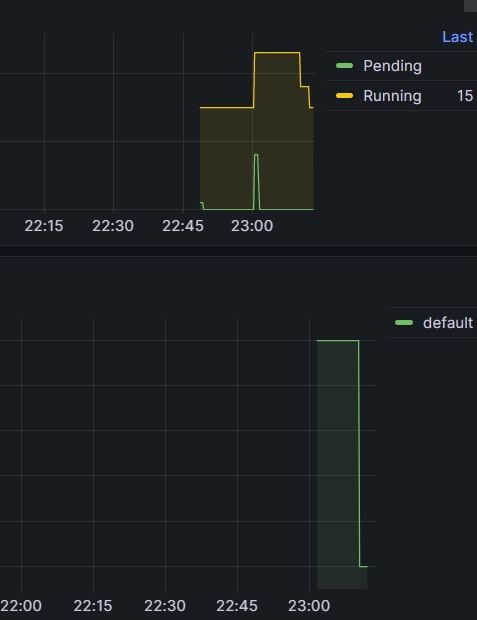

# ScaledObject 정책 생성 : cron

cat <<EOT > keda-cron.yaml

apiVersion: keda.sh/v1alpha1

kind: ScaledObject

metadata:

name: php-apache-cron-scaled

spec:

minReplicaCount: 0

maxReplicaCount: 2

pollingInterval: 30

cooldownPeriod: 300

scaleTargetRef:

apiVersion: apps/v1

kind: Deployment

name: php-apache

triggers:

- type: cron

metadata:

timezone: Asia/Seoul

start: 00,15,30,45 * * * *

end: 05,20,35,50 * * * *

desiredReplicas: "1"

EOT

kubectl apply -f keda-cron.yaml -n keda

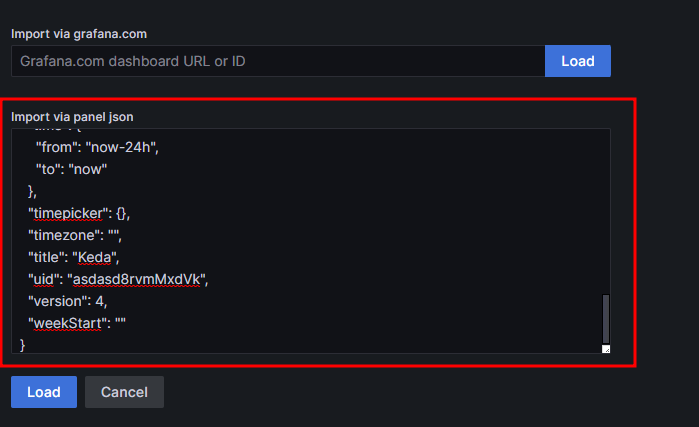

# 그라파나 대시보드 추가

# 모니터링

watch -d 'kubectl get ScaledObject,hpa,pod -n keda'

kubectl get ScaledObject -w

# 확인

kubectl get ScaledObject,hpa,pod -n keda

kubectl get hpa -o jsonpath={.items[0].spec} -n keda | jq

...

"metrics": [

{

"external": {

"metric": {

"name": "s0-cron-Asia-Seoul-00,15,30,45xxxx-05,20,35,50xxxx",

"selector": {

"matchLabels": {

"scaledobject.keda.sh/name": "php-apache-cron-scaled"

}

}

},

"target": {

"averageValue": "1",

"type": "AverageValue"

}

},

"type": "External"

}

# KEDA 및 deployment 등 삭제

kubectl delete -f keda-cron.yaml -n keda && kubectl delete deploy php-apache -n keda && helm uninstall keda -n keda

kubectl delete namespace keda

3. VPA - Vertical Pod Autoscaler

VPA

pod resources.request을 최대한 최적값으로 수정, HPA와 같이 사용 불가능, 수정 시 파드 재실행

악분님 포스팅 내용

https://malwareanalysis.tistory.com/603

EKS 스터디 - 5주차 1편 - VPA

VPA란? VPA(Vertical Pod Autoscaler)는 pod resources.request을 최대한 최적값으로 수정합니다. 수정된 request값이 기존 값보다 위 또는 아래 범위에 속하므로 Vertical라고 표현합니다. pod마다 resource.request를 최

malwareanalysis.tistory.com

# 코드 다운로드

git clone https://github.com/kubernetes/autoscaler.git

cd ~/autoscaler/vertical-pod-autoscaler/

tree hack

# 배포 과정에서 에러 발생 : 방안1 openssl 버전 1.1.1 up, 방안2 브랜치08에서 작업

ERROR: Failed to create CA certificate for self-signing. If the error is "unknown option -addext", update your openssl version or deploy VPA from the vpa-release-0.8 branch.

# 프로메테우스 임시 파일 시스템 사용으로 재시작 시 저장 메트릭과 대시보드 정보가 다 삭제되어서 스터디 시간 실습 시나리오는 비추천

helm upgrade kube-prometheus-stack prometheus-community/kube-prometheus-stack --reuse-values --set prometheusOperator.verticalPodAutoscaler.enabled=true -n monitoring

# openssl 버전 확인

openssl version

OpenSSL 1.0.2k-fips 26 Jan 2017

# openssl 1.1.1 이상 버전 확인

yum install openssl11 -y

openssl11 version

OpenSSL 1.1.1g FIPS 21 Apr 2020

# 스크립트파일내에 openssl11 수정

sed -i 's/openssl/openssl11/g' ~/autoscaler/vertical-pod-autoscaler/pkg/admission-controller/gencerts.sh

# Deploy the Vertical Pod Autoscaler to your cluster with the following command.

watch -d kubectl get pod -n kube-system

cat hack/vpa-up.sh

./hack/vpa-up.sh

kubectl get crd | grep autoscaling

그라파나 대시보드

공식 예제

pod가 실행되면 약 2~3분 뒤에 pod resource.reqeust가 VPA에 의해 수정

# 모니터링

watch -d kubectl top pod

# 공식 예제 배포

cd ~/autoscaler/vertical-pod-autoscaler/

cat examples/hamster.yaml | yh

kubectl apply -f examples/hamster.yaml && kubectl get vpa -w

# 파드 리소스 Requestes 확인

kubectl describe pod | grep Requests: -A2

Requests:

cpu: 100m

memory: 50Mi

--

Requests:

cpu: 587m

memory: 262144k

--

Requests:

cpu: 587m

memory: 262144k

# VPA에 의해 기존 파드 삭제되고 신규 파드가 생성됨

kubectl get events --sort-by=".metadata.creationTimestamp" | grep VPA

2m16s Normal EvictedByVPA pod/hamster-5bccbb88c6-s6jkp Pod was evicted by VPA Updater to apply resource recommendation.

76s Normal EvictedByVPA pod/hamster-5bccbb88c6-jc6gq Pod was evicted by VPA Updater to apply resource recommendation.

# 리소스 삭제

kubectl delete -f examples/hamster.yaml && cd ~/autoscaler/vertical-pod-autoscaler/ && ./hack/vpa-down.sh

4. CA - Cluster Autoscaler

구성소개

- Cluster Autoscale 동작을 하기 위한 cluster-autoscaler 파드(디플로이먼트)를 배치한다.

- Cluster Autoscaler(CA)는 pending 상태인 파드가 존재할 경우, 워커 노드를 스케일 아웃한다.

- 특정 시간을 간격으로 사용률을 확인하여 스케일 인/아웃을 수행한다. 그리고 AWS에서는 Auto Scaling Group(ASG)을 사용하여 Cluster Autoscaler를 적용한다.

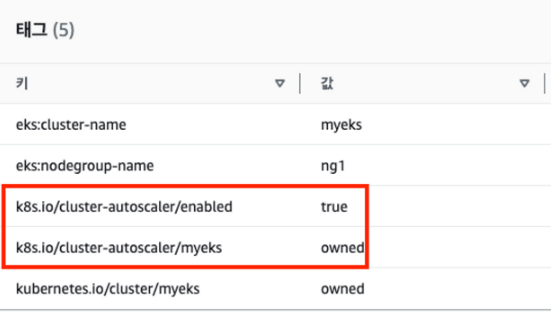

Cluster Autoscaler(CA) 설정

설정 전 확인

# EKS 노드에 이미 아래 tag가 들어가 있음

# k8s.io/cluster-autoscaler/enabled : true

# k8s.io/cluster-autoscaler/myeks : owned

aws ec2 describe-instances --filters Name=tag:Name,Values=$CLUSTER_NAME-ng1-Node --query "Reservations[*].Instances[*].Tags[*]" --output yaml | yh

...

- Key: k8s.io/cluster-autoscaler/myeks

Value: owned

- Key: k8s.io/cluster-autoscaler/enabled

Value: 'true'

...

# 현재 autoscaling(ASG) 정보 확인

# aws autoscaling describe-auto-scaling-groups --query "AutoScalingGroups[? Tags[? (Key=='eks:cluster-name') && Value=='클러스터이름']].[AutoScalingGroupName, MinSize, MaxSize,DesiredCapacity]" --output table

aws autoscaling describe-auto-scaling-groups \

--query "AutoScalingGroups[? Tags[? (Key=='eks:cluster-name') && Value=='myeks']].[AutoScalingGroupName, MinSize, MaxSize,DesiredCapacity]" \

--output table

-----------------------------------------------------------------

| DescribeAutoScalingGroups |

+------------------------------------------------+----+----+----+

| eks-ng1-44c41109-daa3-134c-df0e-0f28c823cb47 | 3 | 3 | 3 |

+------------------------------------------------+----+----+----+

# MaxSize 6개로 수정

export ASG_NAME=$(aws autoscaling describe-auto-scaling-groups --query "AutoScalingGroups[? Tags[? (Key=='eks:cluster-name') && Value=='myeks']].AutoScalingGroupName" --output text)

aws autoscaling update-auto-scaling-group --auto-scaling-group-name ${ASG_NAME} --min-size 3 --desired-capacity 3 --max-size 6

# 확인

aws autoscaling describe-auto-scaling-groups --query "AutoScalingGroups[? Tags[? (Key=='eks:cluster-name') && Value=='myeks']].[AutoScalingGroupName, MinSize, MaxSize,DesiredCapacity]" --output table

-----------------------------------------------------------------

| DescribeAutoScalingGroups |

+------------------------------------------------+----+----+----+

| eks-ng1-c2c41e26-6213-a429-9a58-02374389d5c3 | 3 | 6 | 3 |

+------------------------------------------------+----+----+----+

# 배포 : Deploy the Cluster Autoscaler (CA)

curl -s -O https://raw.githubusercontent.com/kubernetes/autoscaler/master/cluster-autoscaler/cloudprovider/aws/examples/cluster-autoscaler-autodiscover.yaml

sed -i "s/<YOUR CLUSTER NAME>/$CLUSTER_NAME/g" cluster-autoscaler-autodiscover.yaml

kubectl apply -f cluster-autoscaler-autodiscover.yaml

# 확인

kubectl get pod -n kube-system | grep cluster-autoscaler

kubectl describe deployments.apps -n kube-system cluster-autoscaler

# (옵션) cluster-autoscaler 파드가 동작하는 워커 노드가 퇴출(evict) 되지 않게 설정

kubectl -n kube-system annotate deployment.apps/cluster-autoscaler cluster-autoscaler.kubernetes.io/safe-to-evict="false"

SCALE A CLUSTER WITH Cluster Autoscaler(CA)

# 모니터링

kubectl get nodes -w

while true; do kubectl get node; echo "------------------------------" ; date ; sleep 1; done

while true; do aws ec2 describe-instances --query "Reservations[*].Instances[*].{PrivateIPAdd:PrivateIpAddress,InstanceName:Tags[?Key=='Name']|[0].Value,Status:State.Name}" --filters Name=instance-state-name,Values=running --output text ; echo "------------------------------"; date; sleep 1; done

# Deploy a Sample App

# We will deploy an sample nginx application as a ReplicaSet of 1 Pod

cat <<EoF> nginx.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: nginx-to-scaleout

spec:

replicas: 1

selector:

matchLabels:

app: nginx

template:

metadata:

labels:

service: nginx

app: nginx

spec:

containers:

- image: nginx

name: nginx-to-scaleout

resources:

limits:

cpu: 500m

memory: 512Mi

requests:

cpu: 500m

memory: 512Mi

EoF

kubectl apply -f nginx.yaml

kubectl get deployment/nginx-to-scaleout

# Scale our ReplicaSet

# Let’s scale out the replicaset to 15

kubectl scale --replicas=15 deployment/nginx-to-scaleout && date

# 확인

kubectl get pods -l app=nginx -o wide --watch

kubectl -n kube-system logs -f deployment/cluster-autoscaler

# 노드 자동 증가 확인

kubectl get nodes

aws autoscaling describe-auto-scaling-groups \

--query "AutoScalingGroups[? Tags[? (Key=='eks:cluster-name') && Value=='myeks']].[AutoScalingGroupName, MinSize, MaxSize,DesiredCapacity]" \

--output table

./eks-node-viewer

42 pods (0 pending 42 running 42 bound)

ip-192-168-3-196.ap-northeast-2.compute.internal cpu ███████████████████████████████████ 100% (10 pods) t3.medium/$0.0520 On-Demand

ip-192-168-1-91.ap-northeast-2.compute.internal cpu ███████████████████████████████░░░░ 89% (9 pods) t3.medium/$0.0520 On-Demand

ip-192-168-2-185.ap-northeast-2.compute.internal cpu █████████████████████████████████░░ 95% (11 pods) t3.medium/$0.0520 On-Demand

ip-192-168-2-87.ap-northeast-2.compute.internal cpu █████████████████████████████░░░░░░ 84% (6 pods) t3.medium/$0.0520 On-Demand

ip-192-168-3-15.ap-northeast-2.compute.internal cpu █████████████████████████████░░░░░░ 84% (6 pods) t3.medium/$0.0520 On-Demand

# 디플로이먼트 삭제

kubectl delete -f nginx.yaml && date

# 노드 갯수 축소 : 기본은 10분 후 scale down 됨, 물론 아래 flag 로 시간 수정 가능 >> 그러니 디플로이먼트 삭제 후 10분 기다리고 나서 보자!

# By default, cluster autoscaler will wait 10 minutes between scale down operations,

# you can adjust this using the --scale-down-delay-after-add, --scale-down-delay-after-delete,

# and --scale-down-delay-after-failure flag.

# E.g. --scale-down-delay-after-add=5m to decrease the scale down delay to 5 minutes after a node has been added.

# 터미널1

watch -d kubectl get node

리소스 삭제

위 실습 중 디플로이먼트 삭제 후 10분 후 노드 갯수 축소되는 것을 확인 후 아래 삭제를 해보자! >> 만약 바로 아래 CA 삭제 시 워커 노드는 4개 상태가 되어서 수동으로 2대 변경 하자!

kubectl delete -f nginx.yaml

# size 수정

aws autoscaling update-auto-scaling-group --auto-scaling-group-name ${ASG_NAME} --min-size 3 --desired-capacity 3 --max-size 3

aws autoscaling describe-auto-scaling-groups --query "AutoScalingGroups[? Tags[? (Key=='eks:cluster-name') && Value=='myeks']].[AutoScalingGroupName, MinSize, MaxSize,DesiredCapacity]" --output table

# Cluster Autoscaler 삭제

kubectl delete -f cluster-autoscaler-autodiscover.yaml

CA 문제점

하나의 자원에 대해 두군데 (AWS ASG vs AWS EKS)에서 각자의 방식으로 관리

⇒ 관리 정보가 서로 동기화되지 않아 다양한 문제 발생

- CA 문제점 : ASG에만 의존하고 노드 생성/삭제 등에 직접 관여 안함

- EKS에서 노드를 삭제 해도 인스턴스는 삭제 안됨

- 노드 축소 될 때 특정 노드가 축소 되도록 하기 매우 어려움 : pod이 적은 노드 먼저 축소, 이미 드레인 된 노드 먼저 축소

- 특정 노드를 삭제 하면서 동시에 노드 개수를 줄이기 어려움 : 줄일때 삭제 정책 옵션이 다양하지 않음

- 정책 미지원 시 삭제 방식(예시) : 100대 중 미삭제 EC2 보호 설정 후 삭제 될 ec2의 파드를 이주 후 scaling 조절로 삭제 후 원복

- 특정 노드를 삭제하면서 동시에 노드 개수를 줄이기 어려움

- 폴링 방식이기에 너무 자주 확장 여유를 확인 하면 API 제한에 도달할 수 있음

- 스케일링 속도가 매우 느림

- Cluster Autoscaler 는 쿠버네티스 클러스터 자체의 오토 스케일링을 의미하며, 수요에 따라 워커 노드를 자동으로 추가하는 기능

- 언뜻 보기에 클러스터 전체나 각 노드의 부하 평균이 높아졌을 때 확장으로 보인다 → 함정! 🚧

- Pending 상태의 파드가 생기는 타이밍에 처음으로 Cluster Autoscaler 이 동작한다

- 즉, Request 와 Limits 를 적절하게 설정하지 않은 상태에서는 실제 노드의 부하 평균이 낮은 상황에서도 스케일 아웃이 되거나, 부하 평균이 높은 상황임에도 스케일 아웃이 되지 않는다!

- 기본적으로 리소스에 의한 스케줄링은 Requests(최소)를 기준으로 이루어진다. 다시 말해 Requests 를 초과하여 할당한 경우에는 최소 리소스 요청만으로 리소스가 꽉 차 버려서 신규 노드를 추가해야만 한다. 이때 실제 컨테이너 프로세스가 사용하는 리소스 사용량은 고려되지 않는다.

- 반대로 Request 를 낮게 설정한 상태에서 Limit 차이가 나는 상황을 생각해보자. 각 컨테이너는 Limits 로 할당된 리소스를 최대로 사용한다. 그래서 실제 리소스 사용량이 높아졌더라도 Requests 합계로 보면 아직 스케줄링이 가능하기 때문에 클러스터가 스케일 아웃하지 않는 상황이 발생한다.

- 여기서는 CPU 리소스 할당을 예로 설명했지만 메모리의 경우도 마찬가지다.

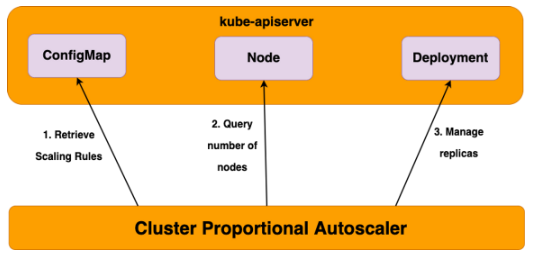

5. CPA - Cluster Proportional Autoscaler

소개

노드 수 증가에 비례하여 성능 처리가 필요한 애플리케이션(컨테이너/파드)를 수평으로 자동 확장

#

helm repo add cluster-proportional-autoscaler https://kubernetes-sigs.github.io/cluster-proportional-autoscaler

# CPA규칙을 설정하고 helm차트를 릴리즈 필요

helm upgrade --install cluster-proportional-autoscaler cluster-proportional-autoscaler/cluster-proportional-autoscaler

# nginx 디플로이먼트 배포

cat <<EOT > cpa-nginx.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: nginx-deployment

spec:

replicas: 1

selector:

matchLabels:

app: nginx

template:

metadata:

labels:

app: nginx

spec:

containers:

- name: nginx

image: nginx:latest

resources:

limits:

cpu: "100m"

memory: "64Mi"

requests:

cpu: "100m"

memory: "64Mi"

ports:

- containerPort: 80

EOT

kubectl apply -f cpa-nginx.yaml

# CPA 규칙 설정

cat <<EOF > cpa-values.yaml

config:

ladder:

nodesToReplicas:

- [1, 1]

- [2, 2]

- [3, 3]

- [4, 3]

- [5, 5]

options:

namespace: default

target: "deployment/nginx-deployment"

EOF

# 모니터링

watch -d kubectl get pod

# helm 업그레이드

helm upgrade --install cluster-proportional-autoscaler -f cpa-values.yaml cluster-proportional-autoscaler/cluster-proportional-autoscaler

# 노드 5개로 증가

export ASG_NAME=$(aws autoscaling describe-auto-scaling-groups --query "AutoScalingGroups[? Tags[? (Key=='eks:cluster-name') && Value=='myeks']].AutoScalingGroupName" --output text)

aws autoscaling update-auto-scaling-group --auto-scaling-group-name ${ASG_NAME} --min-size 5 --desired-capacity 5 --max-size 5

aws autoscaling describe-auto-scaling-groups --query "AutoScalingGroups[? Tags[? (Key=='eks:cluster-name') && Value=='myeks']].[AutoScalingGroupName, MinSize, MaxSize,DesiredCapacity]" --output table

# 노드 4개로 축소

aws autoscaling update-auto-scaling-group --auto-scaling-group-name ${ASG_NAME} --min-size 4 --desired-capacity 4 --max-size 4

aws autoscaling describe-auto-scaling-groups --query "AutoScalingGroups[? Tags[? (Key=='eks:cluster-name') && Value=='myeks']].[AutoScalingGroupName, MinSize, MaxSize,DesiredCapacity]" --output table

# 리소스 삭제

helm uninstall cluster-proportional-autoscaler && kubectl delete -f cpa-nginx.yaml

Karpenter 실습 환경 준비를 위해서 현재 EKS 실습 환경 전부 삭제

Helm Chart 삭제

helm uninstall -n kube-system kube-ops-view

helm uninstall -n monitoring kube-prometheus-stack

삭제

eksctl delete cluster --name $CLUSTER_NAME && aws cloudformation delete-stack --stack-name $CLUSTER_NAME- ALB(Ingress)가 잘 삭제가 되지 않을 경우 수동으로 ALB와 TG를 삭제하고, 이후 VPC를 직접 삭제해주자 → 이후 다시 CloudFormation 스택을 삭제하면 됨

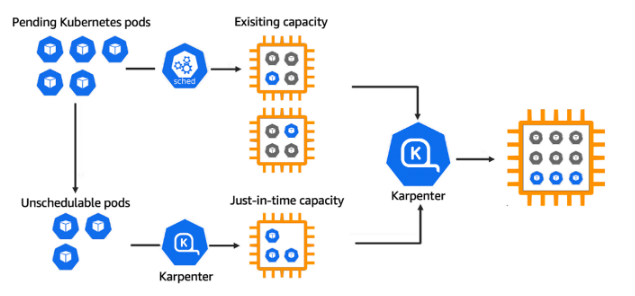

6. Karpenter : K8S Native AutoScaler & Fargate

linuxer 정태환님이 EKS Nodeless 컨셉을 정리해주셨다

⇒ Fargate + Karpenter - 링크

How to create nodeless AWS EKS clusters with Karpenter for autoscaling

Karpenter is an exciting Kubernetes autoscaler that can be used to provision “nodeless” AWS EKS clusters.

verifa.io

출처 : linuxer 정태환님

https://linuxer.name/?s=nodeless

“nodeless” 검색결과 - 리눅서의 기술술 블로그

이제야 드디어 Karpenter까지 왔다. Karpenter의 구성요소부터 살펴보자! PodDisruptionBudget: PodDisruptionBudget은 클러스터의 안정성을 보장하기 위해 사용된다. 특정 서비스를 중단하지 않고 동시에 종료할

linuxer.name

소개

노드 수명 주기 관리 솔루션, 몇 초 만에 컴퓨팅 리소스 제공

Getting Started with Karpenter 실습

복잡도를 줄이기 위해서 신규 EKS(myeks2) 환경에서 실습을 진행한다.

실습 환경 배포(2분 후 접속)

# YAML 파일 다운로드

curl -O https://s3.ap-northeast-2.amazonaws.com/cloudformation.cloudneta.net/K8S/karpenter-preconfig.yaml

# CloudFormation 스택 배포

예시) aws cloudformation deploy --template-file karpenter-preconfig.yaml --stack-name myeks2 --parameter-overrides KeyName=somaz-key SgIngressSshCidr=$(curl -s ipinfo.io/ip)/32 MyIamUserAccessKeyID=AKIA5... MyIamUserSecretAccessKey='CVNa2...' ClusterBaseName=myeks2 --region ap-northeast-2

# CloudFormation 스택 배포 완료 후 작업용 EC2 IP 출력

aws cloudformation describe-stacks --stack-name myeks2 --query 'Stacks[*].Outputs[0].OutputValue' --output text

# 작업용 EC2 SSH 접속

ssh -i ~/.ssh/somaz-key.pem ec2-user@$(aws cloudformation describe-stacks --stack-name myeks2 --query 'Stacks[*].Outputs[0].OutputValue' --output text)배포 전 사전 확인 & eks-node-viewer 설치

# IP 주소 확인 : 172.30.0.0/16 VPC 대역에서 172.30.1.0/24 대역을 사용 중

ip -br -c addr

lo UNKNOWN 127.0.0.1/8 ::1/128

eth0 UP 172.30.1.100/24 fe80::af:cfff:fe26:471a/64

docker0 DOWN 172.17.0.1/16

# EKS Node Viewer 설치 : 현재 ec2 spec에서는 설치에 다소 시간이 소요됨 = 2분 이상

go install github.com/awslabs/eks-node-viewer/cmd/eks-node-viewer@latest

# [터미널1] bin 확인 및 사용

tree ~/go/bin

/root/go/bin

└── eks-node-viewer

cd ~/go/bin

./eks-node-viewer -h

./eks-node-viewer # EKS 배포 완료 후 실행 한다.

EKS 배포

# 환경변수 정보 확인

export | egrep 'ACCOUNT|AWS_|CLUSTER' | egrep -v 'SECRET|KEY'

declare -x ACCOUNT_ID="6118xxxxxxxx"

declare -x AWS_ACCOUNT_ID="6118xxxxxxxx"

declare -x AWS_DEFAULT_REGION="ap-northeast-2"

declare -x AWS_PAGER=""

declare -x AWS_REGION="ap-northeast-2"

declare -x CLUSTER_NAME="myeks2"

# 환경변수 설정

export KARPENTER_VERSION=v0.27.5

export TEMPOUT=$(mktemp)

echo $KARPENTER_VERSION $CLUSTER_NAME $AWS_DEFAULT_REGION $AWS_ACCOUNT_ID $TEMPOUT

v0.27.5 myeks2 ap-northeast-2 6118xxxxxxxx /tmp/tmp.YozE1Ycm0C

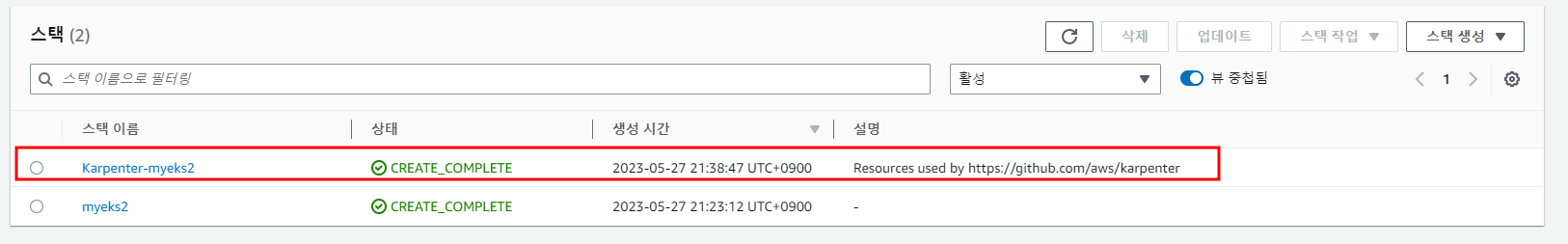

# CloudFormation 스택으로 IAM Policy, Role, EC2 Instance Profile 생성 : 3분 정도 소요

curl -fsSL https://karpenter.sh/"${KARPENTER_VERSION}"/getting-started/getting-started-with-karpenter/cloudformation.yaml > $TEMPOUT \

&& aws cloudformation deploy \

--stack-name "Karpenter-${CLUSTER_NAME}" \

--template-file "${TEMPOUT}" \

--capabilities CAPABILITY_NAMED_IAM \

--parameter-overrides "ClusterName=${CLUSTER_NAME}"

Waiting for changeset to be created..

Waiting for stack create/update to complete

# 클러스터 생성 : myeks2 EKS 클러스터 생성 19분 정도 소요

eksctl create cluster -f - <<EOF

---

apiVersion: eksctl.io/v1alpha5

kind: ClusterConfig

metadata:

name: ${CLUSTER_NAME}

region: ${AWS_DEFAULT_REGION}

version: "1.24"

tags:

karpenter.sh/discovery: ${CLUSTER_NAME}

iam:

withOIDC: true

serviceAccounts:

- metadata:

name: karpenter

namespace: karpenter

roleName: ${CLUSTER_NAME}-karpenter

attachPolicyARNs:

- arn:aws:iam::${AWS_ACCOUNT_ID}:policy/KarpenterControllerPolicy-${CLUSTER_NAME}

roleOnly: true

iamIdentityMappings:

- arn: "arn:aws:iam::${AWS_ACCOUNT_ID}:role/KarpenterNodeRole-${CLUSTER_NAME}"

username: system:node:{{EC2PrivateDNSName}}

groups:

- system:bootstrappers

- system:nodes

managedNodeGroups:

- instanceType: m5.large

amiFamily: AmazonLinux2

name: ${CLUSTER_NAME}-ng

desiredCapacity: 2

minSize: 1

maxSize: 10

iam:

withAddonPolicies:

externalDNS: true

## Optionally run on fargate

# fargateProfiles:

# - name: karpenter

# selectors:

# - namespace: karpenter

EOF

# eks 배포 확인

eksctl get cluster

NAME REGION EKSCTL CREATED

myeks2 ap-northeast-2 True

eksctl get nodegroup --cluster $CLUSTER_NAME

CLUSTER NODEGROUP STATUS CREATED MIN SIZE MAX SIZE DESIRED CAPACITY INSTANCE TYPE IMAGE ID ASG NAME TYPE

myeks2 myeks2-ng ACTIVE 2023-05-27T12:54:35Z 1 10 2 m5.largAL2_x86_64 eks-myeks2-ng-8cc42ea3-2dac-d8ad-3c47-01281fb80a85 managed

eksctl get iamidentitymapping --cluster $CLUSTER_NAME

ARN USERNAME GROUPS ACCOUNT

arn:aws:iam::6118xxxxxxxx:role/KarpenterNodeRole-myeks2 system:node:{{EC2PrivateDNSName}} system:bootstrappers,system:nodes

arn:aws:iam::6118xxxxxxxx:role/eksctl-myeks2-nodegroup-myeks2-ng-NodeInstanceRole-1AO1RP791CI6D system:node:{{EC2PrivateDNSName}} system:bootstrappers,system:nodes

eksctl get iamserviceaccount --cluster $CLUSTER_NAME

NAMESPACE NAME ROLE ARN

karpenter karpenter arn:aws:iam::6118xxxxxxxx:role/myeks2-karpenter

kube-system aws-node arn:aws:iam::6118xxxxxxxx:role/eksctl-myeks2-addon-iamserviceaccount-kube-s-Role1-18IBTU567ZTXC

eksctl get addon --cluster $CLUSTER_NAME

# [터미널1] eks-node-viewer

cd ~/go/bin && ./eks-node-viewer

# k8s 확인

kubectl cluster-info

kubectl get node --label-columns=node.kubernetes.io/instance-type,eks.amazonaws.com/capacityType,topology.kubernetes.io/zone

kubectl get pod -n kube-system -owide

kubectl describe cm -n kube-system aws-auth

...

mapRoles:

----

- groups:

- system:bootstrappers

- system:nodes

rolearn: arn:aws:iam::911283464785:role/KarpenterNodeRole-myeks2

username: system:node:{{EC2PrivateDNSName}}

- groups:

- system:bootstrappers

- system:nodes

rolearn: arn:aws:iam::911283464785:role/eksctl-myeks2-nodegroup-myeks2-ng-NodeInstanceRole-1KDXF4FLKKX1B

username: system:node:{{EC2PrivateDNSName}}

...

# 카펜터 설치를 위한 환경 변수 설정 및 확인

export CLUSTER_ENDPOINT="$(aws eks describe-cluster --name ${CLUSTER_NAME} --query "cluster.endpoint" --output text)"

export KARPENTER_IAM_ROLE_ARN="arn:aws:iam::${AWS_ACCOUNT_ID}:role/${CLUSTER_NAME}-karpenter"

echo $CLUSTER_ENDPOINT $KARPENTER_IAM_ROLE_ARN

# service-linked-role 생성 확인 : 만들어있는것을 확인하는 거라 아래 에러 출력이 정상!

# If the role has already been successfully created, you will see:

# An error occurred (InvalidInput) when calling the CreateServiceLinkedRole operation: Service role name AWSServiceRoleForEC2Spot has been taken in this account, please try a different suffix.

aws iam create-service-linked-role --aws-service-name spot.amazonaws.com || true

# docker logout : Logout of docker to perform an unauthenticated pull against the public ECR

docker logout public.ecr.aws

# karpenter 설치

helm upgrade --install karpenter oci://public.ecr.aws/karpenter/karpenter --version ${KARPENTER_VERSION} --namespace karpenter --create-namespace \

--set serviceAccount.annotations."eks\.amazonaws\.com/role-arn"=${KARPENTER_IAM_ROLE_ARN} \

--set settings.aws.clusterName=${CLUSTER_NAME} \

--set settings.aws.defaultInstanceProfile=KarpenterNodeInstanceProfile-${CLUSTER_NAME} \

--set settings.aws.interruptionQueueName=${CLUSTER_NAME} \

--set controller.resources.requests.cpu=1 \

--set controller.resources.requests.memory=1Gi \

--set controller.resources.limits.cpu=1 \

--set controller.resources.limits.memory=1Gi \

--wait

# 확인

kubectl get-all -n karpenter

kubectl get all -n karpenter

NAME READY STATUS RESTARTS AGE

pod/karpenter-6c6bdb7766-mcbpq 1/1 Running 0 62m

pod/karpenter-6c6bdb7766-v2kf8 1/1 Running 0 62m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/karpenter ClusterIP 10.100.218.180 <none> 8080/TCP,443/TCP 62m

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/karpenter 2/2 2 2 62m

NAME DESIRED CURRENT READY AGE

replicaset.apps/karpenter-6c6bdb7766 2 2 2 62m

kubectl get cm -n karpenter karpenter-global-settings -o jsonpath={.data} | jq

kubectl get crd | grep karpenter

옵션 : ExternalDNS, kube-ops-view

# ExternalDNS

MyDomain=<자신의 도메인>

echo "export MyDomain=<자신의 도메인>" >> /etc/profile

MyDomain=gasida.link

echo "export MyDomain=gasida.link" >> /etc/profile

MyDnzHostedZoneId=$(aws route53 list-hosted-zones-by-name --dns-name "${MyDomain}." --query "HostedZones[0].Id" --output text)

echo $MyDomain, $MyDnzHostedZoneId

curl -s -O https://raw.githubusercontent.com/gasida/PKOS/main/aews/externaldns.yaml

MyDomain=$MyDomain MyDnzHostedZoneId=$MyDnzHostedZoneId envsubst < externaldns.yaml | kubectl apply -f -

# kube-ops-view

helm repo add geek-cookbook https://geek-cookbook.github.io/charts/

helm install kube-ops-view geek-cookbook/kube-ops-view --version 1.2.2 --set env.TZ="Asia/Seoul" --namespace kube-system

kubectl patch svc -n kube-system kube-ops-view -p '{"spec":{"type":"LoadBalancer"}}'

kubectl annotate service kube-ops-view -n kube-system "external-dns.alpha.kubernetes.io/hostname=kubeopsview.$MyDomain"

echo -e "Kube Ops View URL = http://kubeopsview.$MyDomain:8080/#scale=1.5"

Create Provisioner

관리 리소스는 securityGroupSelector and subnetSelector로 찾는다.

ttlSecondsAfterEmpty(미사용 노드 정리, 데몬셋 제외)는 미사용 노드를 정리해준다.

#

cat <<EOF | kubectl apply -f -

apiVersion: karpenter.sh/v1alpha5

kind: Provisioner

metadata:

name: default

spec:

requirements:

- key: karpenter.sh/capacity-type

operator: In

values: ["spot"]

limits:

resources:

cpu: 1000

providerRef:

name: default

ttlSecondsAfterEmpty: 30

---

apiVersion: karpenter.k8s.aws/v1alpha1

kind: AWSNodeTemplate

metadata:

name: default

spec:

subnetSelector:

karpenter.sh/discovery: ${CLUSTER_NAME}

securityGroupSelector:

karpenter.sh/discovery: ${CLUSTER_NAME}

EOF

# 확인

kubectl get awsnodetemplates,provisioners

NAME AGE

awsnodetemplate.karpenter.k8s.aws/default 21m

NAME AGE

provisioner.karpenter.sh/default 21m

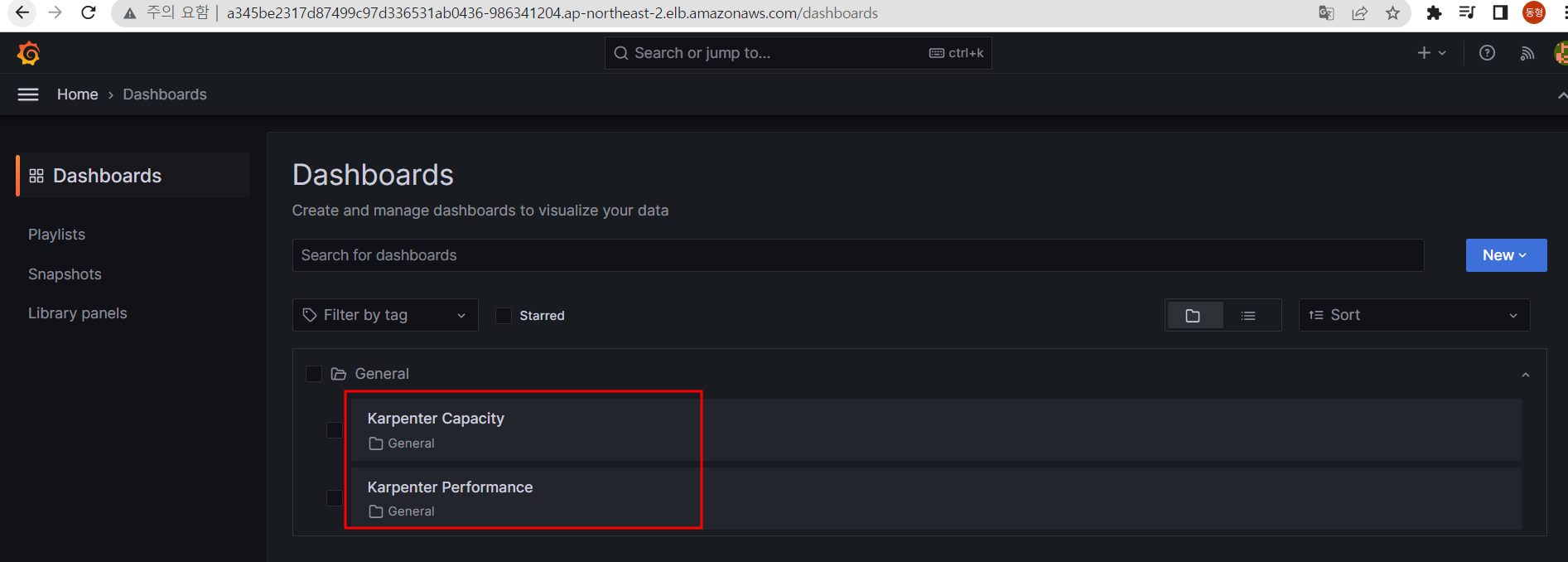

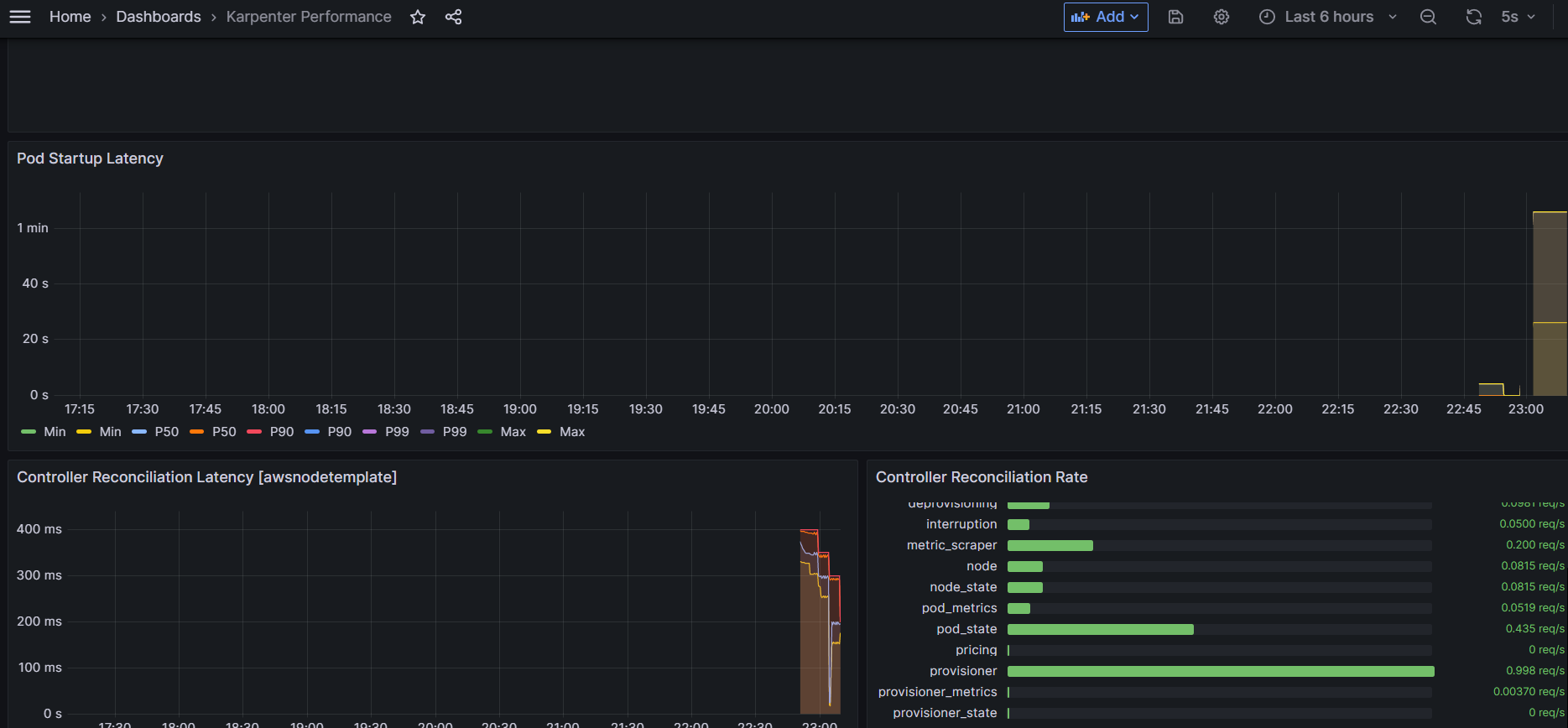

Add optional monitoring with Grafana : 대시보드

#

helm repo add grafana-charts https://grafana.github.io/helm-charts

helm repo add prometheus-community https://prometheus-community.github.io/helm-charts

helm repo update

kubectl create namespace monitoring

# 프로메테우스 설치

curl -fsSL https://karpenter.sh/"${KARPENTER_VERSION}"/getting-started/getting-started-with-karpenter/prometheus-values.yaml | tee prometheus-values.yaml

helm install --namespace monitoring prometheus prometheus-community/prometheus --values prometheus-values.yaml --set alertmanager.enabled=false

# 그라파나 설치

curl -fsSL https://karpenter.sh/"${KARPENTER_VERSION}"/getting-started/getting-started-with-karpenter/grafana-values.yaml | tee grafana-values.yaml

helm install --namespace monitoring grafana grafana-charts/grafana --values grafana-values.yaml --set service.type=LoadBalancer

# admin 암호

kubectl get secret --namespace monitoring grafana -o jsonpath="{.data.admin-password}" | base64 --decode ; echo

Ec8g6KciGzvSFPIdhWdQlQZzllhey9uZ8CMmlPVR

# 그라파나 접속

kubectl annotate service grafana -n monitoring "external-dns.alpha.kubernetes.io/hostname=grafana.$MyDomain"

echo -e "grafana URL = http://grafana.$MyDomain"

# pause 파드 1개에 CPU 1개 최소 보장 할당

cat <<EOF | kubectl apply -f -

apiVersion: apps/v1

kind: Deployment

metadata:

name: inflate

spec:

replicas: 0

selector:

matchLabels:

app: inflate

template:

metadata:

labels:

app: inflate

spec:

terminationGracePeriodSeconds: 0

containers:

- name: inflate

image: public.ecr.aws/eks-distro/kubernetes/pause:3.7

resources:

requests:

cpu: 1

EOF

kubectl scale deployment inflate --replicas 5

kubectl logs -f -n karpenter -l app.kubernetes.io/name=karpenter -c controller

...

2023/05/27 13:04:04 Registering 2 clients

2023/05/27 13:04:04 Registering 2 informer factories

2023/05/27 13:04:04 Registering 3 informers

2023/05/27 13:04:04 Registering 5 controllers

2023-05-27T13:04:04.633Z INFO controller Starting server {"commit": "698f22f-dirty", "path": "/metrics", "kind": "metrics", "addr": "[::]:8080"}

2023-05-27T13:04:04.633Z INFO controller Starting server {"commit": "698f22f-dirty", "kind": "health probe", "addr": "[::]:8081"}

I0527 13:04:04.734300 1 leaderelection.go:248] attempting to acquire leader lease karpenter/karpenter-leader-election...

2023-05-27T13:04:04.799Z INFO controller Starting informers... {"commit": "698f22f-dirty"}

# 스팟 인스턴스 확인!

aws ec2 describe-spot-instance-requests --filters "Name=state,Values=active" --output table

kubectl get node -l karpenter.sh/capacity-type=spot -o jsonpath='{.items[0].metadata.labels}' | jq

kubectl get node --label-columns=eks.amazonaws.com/capacityType,karpenter.sh/capacity-type,node.kubernetes.io/instance-type

NAME STATUS ROLES AGE VERSION CAPACITYTYPE CAPACITY-TYPE INSTANCE-TYPE

ip-192-168-25-56.ap-northeast-2.compute.internal Ready <none> 72m v1.24.13-eks-0a21954 ON_DEMAND

m5.large

ip-192-168-50-95.ap-northeast-2.compute.internal Ready <none> 72m v1.24.13-eks-0a21954 ON_DEMAND

m5.large

ip-192-168-67-200.ap-northeast-2.compute.internal Ready <none> 8m8s v1.24.13-eks-0a21954 spot c4.2xlarge- inflate 파드를 Replicas를 5로 늘리면 리소스 부족으로 Pending이 발생하고 Karpenter가 스케쥴링 안된 Pod를 감지하여 자동으로 Node를 확장한다.

Scale down deployment

- ttlSecondsAfterEmpty 30초

inflate Pod를 삭제하면 Karpenter의 설정 값인 ttlSecondsAfterEmpty 30초가 지나면 노드가 축소된다.

# Now, delete the deployment. After 30 seconds (ttlSecondsAfterEmpty), Karpenter should terminate the now empty nodes.

kubectl delete deployment inflate

kubectl logs -f -n karpenter -l app.kubernetes.io/name=karpenter -c controller

kubectl get node --label-columns=eks.amazonaws.com/capacityType,karpenter.sh/capacity-type,node.kubernetes.io/instance-type

NAME STATUS ROLES AGE VERSION CAPACITYTYPE CAPACITY-TYPE INSTANCE-TYPE

ip-192-168-25-56.ap-northeast-2.compute.internal Ready <none> 76m v1.24.13-eks-0a21954 ON_DEMAND

m5.large

ip-192-168-50-95.ap-northeast-2.compute.internal Ready <none> 76m v1.24.13-eks-0a21954 ON_DEMAND

m5.large

Consolidation

컴퓨팅에서 워크로드를 실행하는 방법의 전반적인 효율성이 향상되어 오버헤드가 줄어들고 비용이 절감된다.

#

kubectl delete provisioners default

cat <<EOF | kubectl apply -f -

apiVersion: karpenter.sh/v1alpha5

kind: Provisioner

metadata:

name: default

spec:

consolidation:

enabled: true

labels:

type: karpenter

limits:

resources:

cpu: 1000

memory: 1000Gi

providerRef:

name: default

requirements:

- key: karpenter.sh/capacity-type

operator: In

values:

- on-demand

- key: node.kubernetes.io/instance-type

operator: In

values:

- c5.large

- m5.large

- m5.xlarge

EOF

#

cat <<EOF | kubectl apply -f -

apiVersion: apps/v1

kind: Deployment

metadata:

name: inflate

spec:

replicas: 0

selector:

matchLabels:

app: inflate

template:

metadata:

labels:

app: inflate

spec:

terminationGracePeriodSeconds: 0

containers:

- name: inflate

image: public.ecr.aws/eks-distro/kubernetes/pause:3.7

resources:

requests:

cpu: 1

EOF

kubectl scale deployment inflate --replicas 12

kubectl logs -f -n karpenter -l app.kubernetes.io/name=karpenter -c controller

# 인스턴스 확인

# This changes the total memory request for this deployment to around 12Gi,

# which when adjusted to account for the roughly 600Mi reserved for the kubelet on each node means that this will fit on 2 instances of type m5.large:

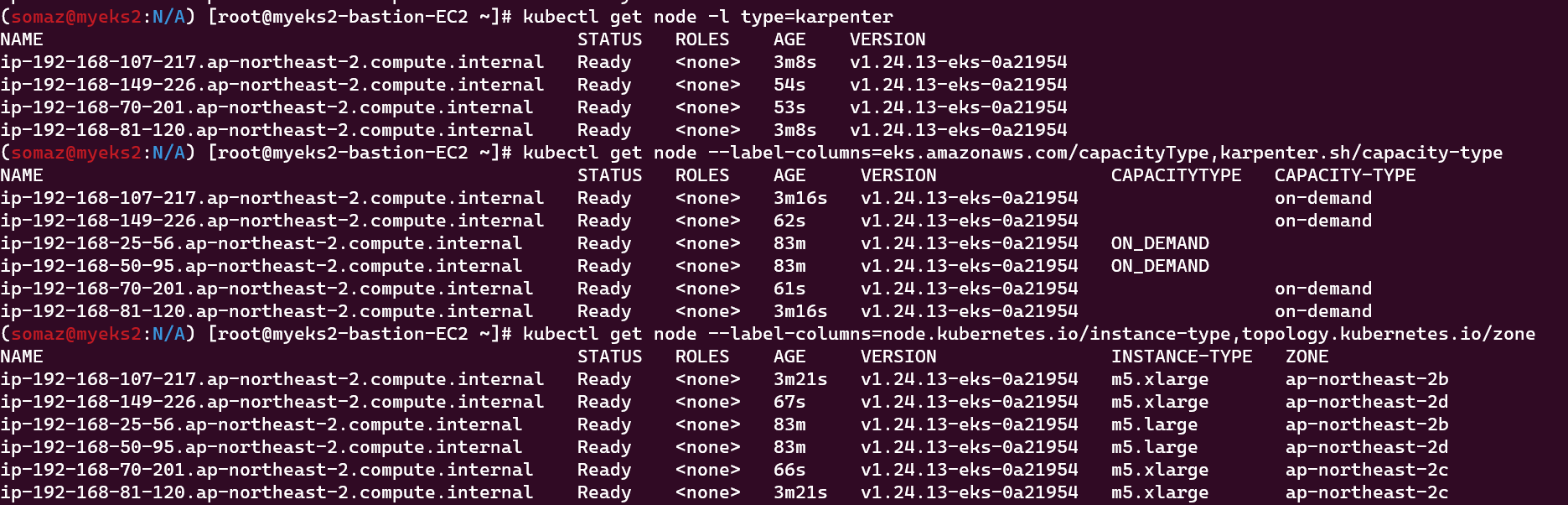

kubectl get node -l type=karpenter

NAME STATUS ROLES AGE VERSION

ip-192-168-107-217.ap-northeast-2.compute.internal Ready <none> 3m8s v1.24.13-eks-0a21954

ip-192-168-149-226.ap-northeast-2.compute.internal Ready <none> 54s v1.24.13-eks-0a21954

ip-192-168-70-201.ap-northeast-2.compute.internal Ready <none> 53s v1.24.13-eks-0a21954

ip-192-168-81-120.ap-northeast-2.compute.internal Ready <none> 3m8s v1.24.13-eks-0a21954

kubectl get node --label-columns=eks.amazonaws.com/capacityType,karpenter.sh/capacity-type

kubectl get node --label-columns=node.kubernetes.io/instance-type,topology.kubernetes.io/zone

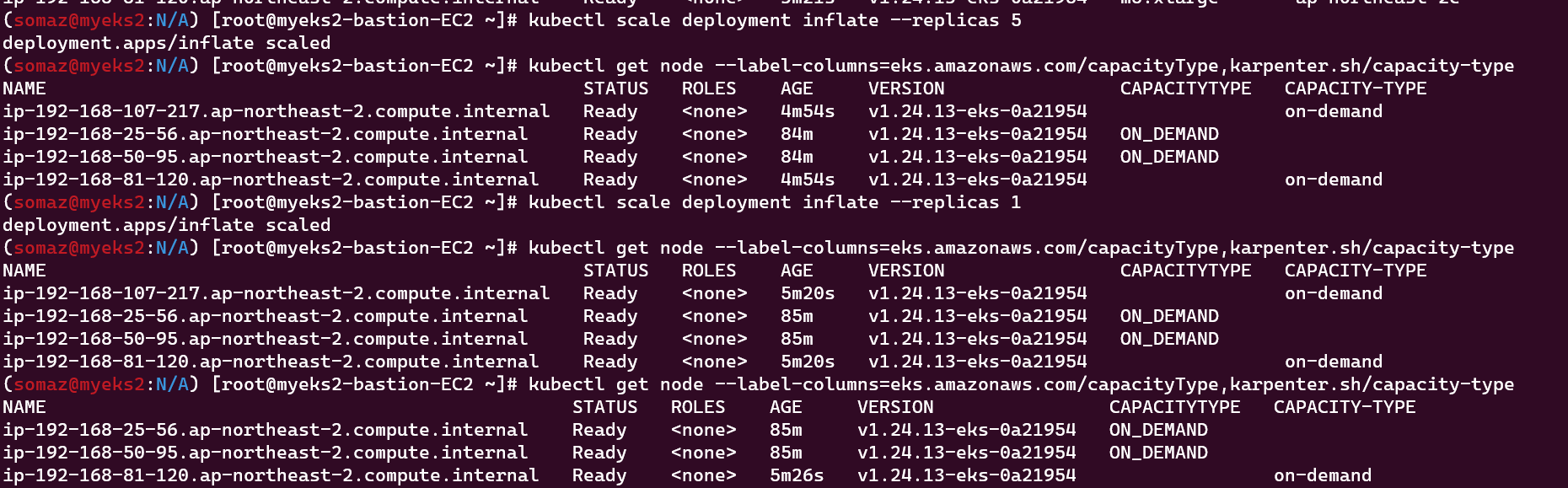

# Next, scale the number of replicas back down to 5:

kubectl scale deployment inflate --replicas 5

# The output will show Karpenter identifying specific nodes to cordon, drain and then terminate:

kubectl logs -f -n karpenter -l app.kubernetes.io/name=karpenter -c controller

2023-05-17T07:02:00.768Z INFO controller.deprovisioning deprovisioning via consolidation delete, terminating 1 machines ip-192-168-14-81.ap-northeast-2.compute.internal/m5.xlarge/on-demand {"commit": "d7e22b1-dirty"}

2023-05-17T07:02:00.803Z INFO controller.termination cordoned node {"commit": "d7e22b1-dirty", "node": "ip-192-168-14-81.ap-northeast-2.compute.internal"}

2023-05-17T07:02:01.320Z INFO controller.termination deleted node {"commit": "d7e22b1-dirty", "node": "ip-192-168-14-81.ap-northeast-2.compute.internal"}

2023-05-17T07:02:39.283Z DEBUG controller deleted launch template {"commit": "d7e22b1-dirty", "launch-template": "karpenter.k8s.aws/9547068762493117560"}

# Next, scale the number of replicas back down to 1

kubectl scale deployment inflate --replicas 1

kubectl logs -f -n karpenter -l app.kubernetes.io/name=karpenter -c controller

2023-05-17T07:05:08.877Z INFO controller.deprovisioning deprovisioning via consolidation delete, terminating 1 machines ip-192-168-145-253.ap-northeast-2.compute.internal/m5.xlarge/on-demand {"commit": "d7e22b1-dirty"}

2023-05-17T07:05:08.914Z INFO controller.termination cordoned node {"commit": "d7e22b1-dirty", "node": "ip-192-168-145-253.ap-northeast-2.compute.internal"}

2023-05-17T07:05:09.316Z INFO controller.termination deleted node {"commit": "d7e22b1-dirty", "node": "ip-192-168-145-253.ap-northeast-2.compute.internal"}

2023-05-17T07:05:25.923Z INFO controller.deprovisioning deprovisioning via consolidation replace, terminating 1 machines ip-192-168-48-2.ap-northeast-2.compute.internal/m5.xlarge/on-demand and replacing with on-demand machine from types m5.large, c5.large {"commit": "d7e22b1-dirty"}

2023-05-17T07:05:25.940Z INFO controller.deprovisioning launching machine with 1 pods requesting {"cpu":"1125m","pods":"4"} from types m5.large, c5.large {"commit": "d7e22b1-dirty", "provisioner": "default"}

2023-05-17T07:05:26.341Z DEBUG controller.deprovisioning.cloudprovider created launch template {"commit": "d7e22b1-dirty", "provisioner": "default", "launch-template-name": "karpenter.k8s.aws/9547068762493117560", "launch-template-id": "lt-036151ea9df7d309f"}

2023-05-17T07:05:28.182Z INFO controller.deprovisioning.cloudprovider launched instance {"commit": "d7e22b1-dirty", "provisioner": "default", "id": "i-0eb3c8ff63724dc95", "hostname": "ip-192-168-144-98.ap-northeast-2.compute.internal", "instance-type": "c5.large", "zone": "ap-northeast-2b", "capacity-type": "on-demand", "capacity": {"cpu":"2","ephemeral-storage":"20Gi","memory":"3788Mi","pods":"29"}}

2023-05-17T07:06:12.307Z INFO controller.termination cordoned node {"commit": "d7e22b1-dirty", "node": "ip-192-168-48-2.ap-northeast-2.compute.internal"}

2023-05-17T07:06:12.856Z INFO controller.termination deleted node {"commit": "d7e22b1-dirty", "node": "ip-192-168-48-2.ap-northeast-2.compute.internal"}

# 인스턴스 확인

kubectl get node -l type=karpenter

kubectl get node --label-columns=eks.amazonaws.com/capacityType,karpenter.sh/capacity-type

kubectl get node --label-columns=node.kubernetes.io/instance-type,topology.kubernetes.io/zone

# 삭제

kubectl delete deployment inflate

실습 리소스 삭제

#

kubectl delete svc -n monitoring grafana

helm uninstall -n kube-system kube-ops-view

helm uninstall karpenter --namespace karpenter

# 위 삭제 완료 후 아래 삭제

aws ec2 describe-launch-templates --filters Name=tag:eks:cluster-name,Values=${CLUSTER_NAME} |

jq -r ".LaunchTemplates[].LaunchTemplateName" |

xargs -I{} aws ec2 delete-launch-template --launch-template-name {}

# 클러스터 삭제

eksctl delete cluster --name "${CLUSTER_NAME}"

#

aws cloudformation delete-stack --stack-name "Karpenter-${CLUSTER_NAME}"

# 위 삭제 완료 후 아래 삭제

aws cloudformation delete-stack --stack-name ${CLUSTER_NAME}

마무리

이번 주차 스터디는 Kubernetes 오토스케일링의 거의 모든 기능과 툴을 Hands-on으로 직접 실습해보는 가성비 최고의 시간이었다.

핵심 포인트 정리

- HPA는 CPU 기반으로, KEDA는 이벤트 기반으로, VPA는 request 조정으로, CA/Karpenter는 노드 단위로 각각 역할이 다르다.

- 모니터링 도구(Grafana + Prometheus)를 잘 활용하면 스케일링 상태를 직관적으로 확인 가능하다.

- Karpenter는 빠른 프로비저닝 + 비용 최적화 + 유연한 인프라 설계를 가능하게 해주는 차세대 오토스케일링 솔루션이다.

이제 EKS 오토스케일링에 대한 이론과 실습 경험을 모두 쌓았으니, 실제 운영 환경에 적용하는 데 한 걸음 더 가까워졌다.

Reference

(🧝🏻♂️)김태민 기술 블로그 - 링크

[EKS Workshop] : https://www.eksworkshop.com/docs/autoscaling/

[studio] HPA CA : https://catalog.us-east-1.prod.workshops.aws/workshops/9c0aa9ab-90a9-44a6-abe1-8dff360ae428/ko-KR/100-scaling

[blog] Karpenter 소개 – 오픈 소스 고성능 Kubernetes 클러스터 오토스케일러 - 링크

[Youtube] 오픈 소스 Karpenter를 활용한 Amazon EKS 확장 운영 전략 (신재현) 무신사 - 링크

[blog] Optimizing your Kubernetes compute costs with Karpenter consolidation - 링크

[blog] Scaling Kubernetes with Karpenter: Advanced Scheduling with Pod Affinity and Volume Topology Awareness - 링크

[blog] Introducing Karpenter – An Open-Source High-Performance Kubernetes Cluster Autoscaler - 링크

[K8S Docs] Horizontal Pod Autoscaling - 링크 & HorizontalPodAutoscaler Walkthrough - 링크

[Youtube] Workload Consolidation with Karpenter - 링크

'교육, 커뮤니티 후기 > AEWS 스터디' 카테고리의 다른 글

| AEWS 스터디 7주차 - EKS Automation (0) | 2023.06.08 |

|---|---|

| AEWS 스터디 6주차 - EKS Security (4) | 2023.06.03 |

| AEWS 스터디 4주차 - EKS Observability (0) | 2023.05.17 |

| AEWS 스터디 3주차 - EKS Storage & Node 관리 (1) | 2023.05.11 |

| AEWS 스터디 2주차 - EKS Networking (0) | 2023.05.01 |